Contributed by Benedikt Koller

Original article posted on May 17, 2020

No way around it: I am what you call an “Ops guy”. In my career I admin’ed more servers than I’ve written code. Over twelve years in the industry have left their permanent mark on me. For the last two of those I’ve been exposed to a new beast – Machine Learning. My hustle is bringing Ops-Knowledge to ML. These are my thoughts on that.

Deploying software into production

Hundreds of thousands of companies deploy software into production every day. Every deployment mechanism has someone who built it. Whoever it was (The Ops Guy™, SRE-Teams, “Devops Engineers”), all follow tried-and-true paradigms. After all, the goal is to ship code often, in repeatable and reliable ways. Let me give you a quick primer on two of those.

Infrastructure-as-code (IaC)

Infrastructure as code, or IaC, applies software engineering rules to infrastructure management. The goal is to avoid environment drift, and to ensure idempotent operations. In plain words, read the infrastructure configuration and you’ll know exactly what the resulting environment looks like. You can rerun the provisioning without side effects, and your infrastructure has a predictable state. IaC allows for version-controlled evolution of infrastructures and quick provisioning of extra resources. It does so through declarative configurations.
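To make "declarative" and "idempotent" concrete, here is a minimal sketch in Python. It is not how Terraform or Kubernetes work internally; the `Environment` class, the `apply` function and the resource names are all made up for illustration. The idea is only that you declare the desired state, a reconcile step closes the gap, and running it a second time does nothing.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    """Stand-in for real infrastructure: maps a resource name to its settings."""
    resources: dict = field(default_factory=dict)


def apply(desired: dict, env: Environment) -> list:
    """Make env match the declared state and return the actions taken."""
    actions = []
    # Create or update every resource that differs from its declaration.
    for name, spec in desired.items():
        if env.resources.get(name) != spec:
            verb = "update" if name in env.resources else "create"
            env.resources[name] = dict(spec)
            actions.append((verb, name))
    # Remove anything that exists but is no longer declared (drift).
    for name in list(env.resources):
        if name not in desired:
            del env.resources[name]
            actions.append(("delete", name))
    return actions


# Hypothetical declared state; the names and images are placeholders.
desired_state = {
    "web": {"replicas": 3, "image": "web:1.4"},
    "db": {"replicas": 1, "image": "postgres:12"},
}

env = Environment(resources={"legacy-cron": {"replicas": 1}})
first_run = apply(desired_state, env)
second_run = apply(desired_state, env)  # idempotent: nothing left to do
```

The first run creates the two declared resources and removes the undeclared one; the second run returns an empty action list because the environment already matches the declaration.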

Famous tools for this paradigm are Terraform, and to a large degree Kubernetes itself.

Immutable infrastructure

In conjunction with IaC, immutable infrastructure ensures the provisioned state is maintained. Someone ssh’ed onto your server? It’s tainted – you have no guarantee that it still is in the identical shape to the rest of your stack. Interaction between a provisioned infrastructure and new code happens only through automation. Infrastructure, e.g. a Kubernetes cluster, is never modified after it’s provisioned. Updates, fixes and modifications are only possible through new deployments of your infrastructure.

Operational efficiency requires thorough automation and handling of ephemeral data. Immutable infrastructure mitigates config drift and snowflake server woes entirely.

ML development

Developing machine learning models works in different ways. In a worst case scenario, new models begin their “life” in a Jupyter Notebook on someone’s laptop. Code is not checked into git, there is no requirements file, and cells can be executed in any arbitrary order. Data exploration and preprocessing are intermingled. Training happens on that one shared VM with the NVIDIA K80, but someone messed with the CUDA drivers. Ah, and does anyone remember where I put those matplotlib-screenshots that showed the AUROC and MSE?

Getting ML models into production reliably, repeatedly and fast remains a challenge, and large data sets become a multiplying factor. The solution? Learn from our Ops-brethren.

We can extract key learnings from the evolution of infrastructure management and software deployments:

- Automate processing and provisioning

- Version-control states and instructions

- Write declarative configs

How can we apply them to an ML training flow?

Fetching data

Automate fetching of data. Declaratively define the datasource, the subset of data to use and then persist the results. Repeated experiments on the same source and subset can use the cached results.

Thanks to automation, fetching data can be rerun at any time. The results are persisted, so data can be versioned. And by reading the input configuration everyone can clearly tell what went into the experiment.
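A minimal sketch of that idea, assuming a config dict is all it takes to describe a fetch. The function names, the `warehouse.events` source and the synthetic `fake_source` loader are hypothetical. The config is hashed into a cache key, so the same declaration always maps to the same persisted result.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def config_key(config: dict) -> str:
    """Stable fingerprint of a fetch configuration (independent of key order)."""
    canonical = json.dumps(config, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


def fetch(config: dict, cache_dir: Path, load_rows) -> tuple:
    """Return (rows, from_cache). load_rows is only called on a cache miss."""
    target = cache_dir / f"{config_key(config)}.json"
    if target.exists():
        return json.loads(target.read_text()), True
    rows = load_rows(config)
    target.write_text(json.dumps(rows))  # persist so the result can be versioned
    return rows, False


def fake_source(config: dict) -> list:
    """Hypothetical data source: yields `limit` rows of synthetic data."""
    return [{"id": i, "value": i * i} for i in range(config["limit"])]


fetch_config = {"source": "warehouse.events", "columns": ["id", "value"], "limit": 5}
cache = Path(tempfile.mkdtemp())
rows_first, cached_first = fetch(fetch_config, cache, fake_source)
rows_again, cached_again = fetch(fetch_config, cache, fake_source)
```

The second call never touches the source: it reads the file written by the first. Changing any value in `fetch_config` produces a different key and therefore a fresh fetch.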

Splitting (and preprocessing) data

Splitting data can be standardized into functions.

- Splitting happens on a quota, e.g. 70% into train, 30% into eval. Data might be sorted on an index, data might be categorized.

- Splitting happens based on features/columns. Data might be categorized, data might be sorted on an index.

- Data might require preprocessing / feature engineering (e.g. filling, standardization).

- A wild mix of the above.

Given those, we can define an interface and invoke processing through parameters – and use a declarative config. Persist the results so future experiments can warm-start.

Implementation of interfaces makes automated processing possible. The resulting train/eval datasets are versionable, and my input config is the declarative authority on the resulting state of the input dataset.
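As a sketch of such an interface, here is a quota-based split driven entirely by a config. The config keys (`ratios`, `sort_by`, `seed`) are my own invention for this example, not an existing schema.

```python
import random


def split_dataset(rows: list, config: dict) -> dict:
    """Split rows into named partitions according to a declarative config."""
    rows = list(rows)
    if config.get("sort_by"):
        # Index-based split, e.g. chronological.
        rows.sort(key=lambda r: r[config["sort_by"]])
    else:
        # Seeded shuffle keeps the split repeatable across runs.
        random.Random(config.get("seed", 0)).shuffle(rows)

    ratios = config["ratios"]
    if abs(sum(ratios.values()) - 1.0) > 1e-9:
        raise ValueError("split ratios must sum to 1")

    result, start = {}, 0
    names = list(ratios)
    for i, name in enumerate(names):
        # The last partition takes the remainder so no row is lost to rounding.
        is_last = i == len(names) - 1
        end = len(rows) if is_last else start + round(len(rows) * ratios[name])
        result[name] = rows[start:end]
        start = end
    return result


split_config = {"ratios": {"train": 0.7, "eval": 0.3}, "sort_by": "timestamp"}
data = [{"timestamp": t, "x": t % 3} for t in range(10, 0, -1)]
parts = split_dataset(data, split_config)
```

With ten rows and a 70/30 quota, `train` holds the seven earliest timestamps and `eval` the three latest. Dropping `sort_by` and setting a `seed` gives a random but repeatable split instead.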

Training

Standardizing models is hard. Higher-level abstractions like TensorFlow and Keras already provide comprehensive APIs, but complex architectures need custom code injection.

A declarative config will, at least, state which version-controlled code was used. Re-runs on the same input will deliver the same results, re-runs on different inputs can be compared. Automation of training will yield a version-controllable artefact – the model – of a declared and therefore anticipatable shape.
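A toy illustration of "same input, same result", with a seeded random generator standing in for actual training. The config keys and the `code_version` value are hypothetical; real frameworks need more than one seed to be fully deterministic.

```python
import random


def train(config: dict) -> dict:
    """Pretend training run: the artifact depends only on the declared config."""
    rng = random.Random(config["seed"])
    weights = [round(rng.uniform(-1, 1), 6) for _ in range(config["n_weights"])]
    # The artifact records which code and config produced it.
    return {"code_version": config["code_version"], "config": dict(config), "weights": weights}


train_config = {"code_version": "git:3f2a9c1", "seed": 7, "n_weights": 4}
model_a = train(train_config)
model_b = train(train_config)               # identical re-run
model_c = train({**train_config, "seed": 8})  # different input, comparable output
```

Here `model_a` and `model_b` are equal, while `model_c` differs and carries its own config, so the two can be compared side by side.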

Evaluation

Surprisingly, this is the hardest to fully automate. The dataset and individual use case define the required evaluation metrics. However, we can stand on the shoulders of giants. Great tools like TensorBoard and the What-If-Tool go a long way. Our automation just needs to account for enough flexibility that a.) custom metrics for evaluation can be injected, and b.) raw training results are exposed for custom evaluation means.
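Both requirements fit in a few lines. This is only a sketch; `evaluate`, `mse` and `max_abs_error` are names I picked for the example. Metrics are passed in as plain callables, and the raw values are returned next to the computed numbers.

```python
from typing import Callable, Dict, List


def mse(y_true: List[float], y_pred: List[float]) -> float:
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def evaluate(y_true, y_pred, metrics: Dict[str, Callable]) -> dict:
    """Run every injected metric and expose the raw predictions alongside."""
    report = {name: fn(y_true, y_pred) for name, fn in metrics.items()}
    report["raw"] = {"y_true": list(y_true), "y_pred": list(y_pred)}
    return report


def max_abs_error(y_true, y_pred) -> float:
    """A custom, use-case-specific metric injected by the caller."""
    return max(abs(t - p) for t, p in zip(y_true, y_pred))


report = evaluate(
    [1.0, 2.0, 3.0],
    [1.0, 2.5, 2.0],
    metrics={"mse": mse, "max_abs_error": max_abs_error},
)
```

Anything the built-in metrics do not cover can still be computed later from `report["raw"]`.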

Serving

Serving is caught between the worlds. It would be easy to claim that a trained model is a permanent artifact, like you might claim that a Docker container acts as an artifact of software development. We can borrow another learning from software developers – if you don’t understand where your code is run, you don’t understand your code.

Only by understanding how a model is served will an ML training flow ever be complete. For one, data is prone to change. A myriad of reasons might be the cause, but the result remains the same: Models need to be retrained to account for data drift. In short, continuous training is required. Through the declarative configuration of our ML flow so far we can reuse this configuration and inject new data – and iterate on those new results.

For another, preprocessing might need embedding with your model. Automation lets us apply the same preprocessing steps used in training to live data, guaranteeing identical shape of input data.
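One way to picture this is to ship the fitted preprocessing step and the model as a single object. The sketch below is a toy: the classes are hypothetical and the "model" is a fixed linear function rather than anything trained.

```python
from dataclasses import dataclass


@dataclass
class Standardizer:
    """Preprocessing step whose parameters are learned from training data."""
    mean: float = 0.0
    std: float = 1.0

    def fit(self, values):
        n = len(values)
        self.mean = sum(values) / n
        variance = sum((v - self.mean) ** 2 for v in values) / n
        self.std = variance ** 0.5 or 1.0  # fall back to 1.0 for constant data
        return self

    def transform(self, values):
        return [(v - self.mean) / self.std for v in values]


@dataclass
class ServableModel:
    """Bundles preprocessing with the model so both ship as one artifact."""
    preprocess: Standardizer
    weight: float
    bias: float

    def predict(self, raw_values):
        scaled = self.preprocess.transform(raw_values)
        return [self.weight * x + self.bias for x in scaled]


train_values = [2.0, 4.0, 6.0, 8.0]
scaler = Standardizer().fit(train_values)
model = ServableModel(preprocess=scaler, weight=2.0, bias=1.0)  # toy weights
live_predictions = model.predict([5.0])
```

Because the serving side calls `predict` on raw values, it cannot accidentally skip or re-fit the scaling that was used during training.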

Why?

Outside academia, performance of machine learning models is measured through impact – economically, or by increased efficiency. Only reliable and consistent results are true measures for the success of applied ML. We as a new and still growing part of software engineering have to make sure of this success. And the reproducibility of success hinges on the repeatability of the full ML development lifecycle.

Originally posted on Medium by community member, Andreas Grimmer

Continuous Delivery (CD) and Runbook Automation are standard means to deploy, operate and manage software artifacts across the software life cycle. Based on our analysis of many delivery pipeline implementations, we have seen that on average seven or more tools are included in these processes, e.g., version control, build management, issue tracking, testing, monitoring, deployment automation, artifact management, incident management, or team communication. Most often, these tools are “glued together” using custom, ad-hoc integrations in order to form a full end-to-end workflow. Unfortunately, these custom ad-hoc tool integrations also exist in Runbook Automation processes.

Problem: Point-to-Point Integrations are Hard to Scale and Maintain

Not only is this approach error-prone but maintenance and troubleshooting of these integrations in all their permutations is time-intensive too. There are several factors that prevent organizations from scaling this across multiple teams:

- Number of tools: Although the great availability of different tools always allows having the appropriate tool in place, the number of required integrations explodes.

- Tight coupling: The tool integrations are usually implemented within the pipeline, which results in a tight coupling between the pipeline and the tool.

- Copy-paste pipeline programming: A common approach we are frequently seeing is that a pipeline with a working tool integration is often used as a starting point for new pipelines. If now the API of a used tool changes, all pipelines have to catch up to stay compatible and to prevent vulnerabilities.

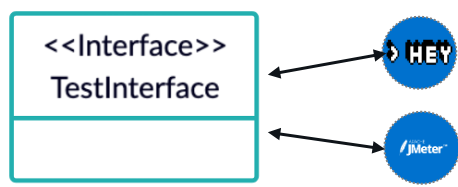

Let’s imagine an organization with hundreds of copy-paste pipelines, which all contain a hard-coded piece of code for triggering Hey load tests. Now this organization would like to switch from Hey to JMeter. Therefore, they would have to change all their pipelines. This is clearly not efficient!

Solution: Providing Standardized Interoperability Interfaces

In order to solve these challenges, we propose introducing interoperability interfaces, which abstract the tooling used in CD and Runbook Automation processes. These interfaces should trigger operations in a tool-agnostic way.

For example, a test interface could abstract different testing tools. This interface can then be used within a pipeline to trigger a test without knowing which tool is executing the actual test in the background.
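In code, such a test interface could look roughly like the following sketch. The class and function names are hypothetical, and the two runners are stubs that only report what they would do; they do not invoke Hey or JMeter.

```python
from abc import ABC, abstractmethod


class LoadTestRunner(ABC):
    """Tool-agnostic interface a pipeline calls to trigger a load test."""

    @abstractmethod
    def run(self, target_url: str, requests: int) -> dict:
        ...


class HeyRunner(LoadTestRunner):
    def run(self, target_url, requests):
        # A real adapter would invoke the tool; this stub only reports the plan.
        return {"tool": "hey", "target": target_url, "requests": requests}


class JMeterRunner(LoadTestRunner):
    def run(self, target_url, requests):
        return {"tool": "jmeter", "target": target_url, "requests": requests}


RUNNERS = {"hey": HeyRunner, "jmeter": JMeterRunner}


def pipeline_test_stage(runner: LoadTestRunner, target_url: str) -> dict:
    """Pipeline code depends only on the interface, never on a concrete tool."""
    return runner.run(target_url, requests=200)


# Switching tools is a one-line configuration change, not a pipeline rewrite.
configured_tool = "jmeter"
result = pipeline_test_stage(RUNNERS[configured_tool](), "http://staging.example.test/health")
```

An organization with hundreds of pipelines would change `configured_tool` in one place instead of editing every pipeline.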

The importance of these interoperability interfaces is confirmed by the fact that the Continuous Delivery Foundation has established a dedicated working group on Interoperability, and by the open-source project Eiffel, which provides an event-based protocol enabling technology-agnostic communication, especially for Continuous Integration tasks.

Use Events as Interoperability Interfaces

By implementing these interoperability interfaces, we define a standardized set of events. These events are based on CloudEvents and allow us to describe event data in a common way.

The first goal of our standardization efforts is to define a common set of CD and runbook automation operations. We identified the following common operations (please let us know if we are missing important operations!):

- Operations in CD processes: deployment, test, evaluation, release, rollback

- Operations in Runbook Automation processes: problem analysis, execution of the remediation action, evaluation, and escalation/resolution notification

For each of these operations, an interface is required, which abstracts the tooling executing the operation. When using events, each interface can be modeled as a dedicated event type.

The second goal is to standardize the data within the event, which is needed by the tools in order to trigger the respective operation. For example, a deployment tool would need the information of the artifact to be deployed in the event. Therefore, the event can either contain the required resources (e.g. a Helm chart for k8s) or a URI to these resources.
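As an illustration, a deployment event could be built like this. The envelope uses the CloudEvents 1.0 context attributes (`specversion`, `id`, `source` and `type` are the required ones). The event type, the source and the shape of `data` are placeholders made up for this sketch and are not taken from the Keptn spec.

```python
import json
import uuid
from datetime import datetime, timezone


def make_deployment_event(service: str, chart_uri: str) -> dict:
    """Build an event whose envelope uses the CloudEvents 1.0 context attributes."""
    return {
        # Required CloudEvents context attributes.
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": "/pipelines/example",  # hypothetical source identifier
        "type": "com.example.cd.deployment.requested",  # hypothetical event type
        # Optional context attributes.
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        # Payload: what a deployment tool needs in order to act.
        # Here the event carries a URI to the chart rather than the chart itself.
        "data": {"service": service, "chart": {"uri": chart_uri}},
    }


event = make_deployment_event("carts", "https://charts.example.test/carts-0.1.0.tgz")
encoded = json.dumps(event)
```

Any tool that subscribes to this event type can pick up the chart URI and perform the deployment, without the sender knowing which tool that is.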

We already defined a first set of events (https://github.com/keptn/spec), which is specifically designed for Keptn, an open-source project implementing a control plane for continuous delivery and automated operations. We know that these events are currently too tailored for Keptn and single tools. So, please

Let us Work Together on Standardizing Interoperability Interfaces

In order to work on a standardized set of events, we would like to ask you to join us in Keptn Slack.

We can use the #keptn-spec channel in order to work on standardizing interoperability interfaces, which eventually are directly interpreted by tools and will make custom tool integrations obsolete!

From Dailymotion, a French video-sharing technology platform with over 300 million unique monthly users

At Dailymotion, we are hosting and delivering premium video content to users all around the world. We are building a large variety of software to power this service, from our player or website to our GraphQL API or ad-tech platform. Continuous Delivery is a central practice in our organization, allowing us to push new features quickly and in an iterative way.

We are early adopters of Kubernetes: we built our own hybrid platform, hosted both on-premise and on the cloud. And we heavily rely on Jenkins to power our “release platform”, which is responsible for building, testing, packaging and deploying all our software. Because we have hundreds of repositories, we are using Jenkins Shared Libraries to keep our pipeline files as small as possible. It is an important feature for us, ensuring both a low maintenance cost and a homogeneous experience for all developers – even though they are working on projects using different technology stacks. We even built Gazr, a convention for writing Makefiles with standard targets, which is the foundation for our Jenkins Pipelines.

In 2018, we migrated our ad-tech product to Kubernetes and took the opportunity to set up a Jenkins instance in our new cluster – or better yet move to a “cloud-native” alternative. Jenkins X was released just a few months before, and it seemed like a perfect match for us:

- It is built on top of and for Kubernetes.

- At that time – in 2018 – it was using Jenkins to run the pipelines, which was good news given our experience with Jenkins.

- It comes with features such as preview environments which are a real benefit for us.

- And it uses the GitOps practice, which we found very interesting because we love version control, peer review, and automation.

While adopting Jenkins X we discovered that it is first a set of good practices derived from the best performing teams, and then a set of tools to implement these practices. If you try to adopt the tools without understanding the practices, you risk fighting against the tool because it won’t fit your practices. So you should start with the practices. Jenkins X is built on top of the practices described in the Accelerate book, such as micro-services and loosely-coupled architecture, trunk-based development, feature flags, backward compatibility, continuous integration, frequent and automated releases, continuous delivery, GitOps, … Understanding these practices and their benefits is the first step. After that, you will see the limitations of your current workflow and tools. This is when you can introduce Jenkins X, its workflow and set of tools.

We’ve been using Jenkins X since the beginning of 2019 to handle all the build and delivery of our ad-tech platform, with great benefits. The main one being the improved velocity: we used to release and deploy every two weeks, at the end of each sprint. Following the adoption of Jenkins X and its set of practices, we’re now releasing between 10 and 15 times per day and deploying to production between 5 and 10 times per day. According to the State of DevOps Report for 2019, our ad-tech team jumped from the medium performers’ group to somewhere between the high and elite performers’ groups.

But these benefits did not come for free. Adopting Jenkins X early meant that we had to customize it to bypass its initial limitations, such as the ability to deploy to multiple clusters. We’ve detailed our work in a recent blog post, and we received the “Most Innovative Jenkins X Implementation” Jenkins Community Award in 2019 for it. It’s important to note that most of the issues we found have been fixed or are being fixed. The Jenkins X team has been listening to the community feedback and is really focused on improving their product. The new Jenkins X Labs is a good example.

As our usage of Jenkins X grows, we’re increasingly hitting the limits of the single Jenkins instance deployed as part of Jenkins X. In a platform where every component has been developed with a cloud-native mindset, Jenkins is the only one that has been forced into an environment for which it was not built. It is still a single point of failure, with a much higher maintenance cost than the other components – mainly due to the various plugins.

In 2019, the Jenkins X team started to replace Jenkins with a combination of Prow and Tekton. Prow (or Lighthouse) is the component which handles the incoming webhook events from GitHub, and what Jenkins X calls the “ChatOps”: all the interactions between GitHub and the CI/CD platform. Tekton is a pipeline execution engine. It is a cloud-native project built on top of Kubernetes, fully leveraging the API and capabilities of this platform. No single point of failure, no plugins compatibility nightmare – yet.

Since the beginning of 2020, we’ve started an internal project to upgrade our Jenkins X setup – by introducing Prow and Tekton. We saw immediate benefits:

- Faster scheduling of pipeline “runner” pods – because all components are now Kubernetes-native components.

- Simpler pipelines – thanks to both the Jenkins X Pipelines YAML syntax and the ability to easily split a complex pipeline into multiple small ones that are run concurrently.

- Lower maintenance cost.

While replacing the pipeline engine of Jenkins X might seem like an implementation detail, in fact, it has a big impact on the developers. Everybody is used to seeing the Jenkins UI as the CI/CD UI – the main entry point, the way to manually restart pipeline executions, to access logs and test results. Sure, there is a new UI and a real API with an awesome CLI, but the new UI is not finished yet, and some people still prefer to use web browsers and terminals. Leaving the Jenkins Plugins ecosystem is also a difficult decision because some projects heavily rely on a few plugins. And finally, with the introduction of Prow (Lighthouse) the GitHub workflow is a bit different, with Pull Request merges being done automatically, instead of people manually merging when all the reviews and automated checks are green.

So if 2019 was the year of Jenkins X at Dailymotion, 2020 will definitely be the year of Tekton: our main release platform – used by almost all our projects except the ad-tech ones – is still powered by Jenkins, and we increasingly feel its limitations in a Kubernetes world. This is why we plan to replace all our Jenkins instances with Tekton, which was truly built for Kubernetes and will enable us to scale our Continuous Delivery practices.

By Tracy Ragan, CEO of DeployHub, CD Foundation Board Member

Microservice pipelines are different than traditional pipelines. As the saying goes…

“The more things change; the more things stay the same.”

As with every step in the software development evolutionary process, our basic software practices are changing with Kubernetes and microservices. But the basic requirements of moving software from design to release remain the same. Their look may change, but all the steps are still there. In order to adapt to a new microservices architecture, DevOps Teams simply need to understand how our underlying pipeline practices need to shift and change shape.

Understanding Why Microservice Pipelines are Different

The key to understanding microservices is to think ‘functions.’ With a microservice environment the concept of an ‘application’ goes away. It is replaced by a grouping of loosely coupled services connected via APIs at runtime, running inside of containers, nodes and pods. The microservices are reused across teams increasing the need for improved organization (Domain Driven Design), collaboration, communication and visibility.

The biggest change in a microservice pipeline is having a single microservice used by multiple application teams independently moving through the life cycle. Again, one must stop thinking ‘application’ and instead think ‘functions’ to fully appreciate the oncoming shift. And remember, multiple versions of a microservice could be running in your environments at the same time.

Microservices are immutable. You don’t ‘copy over’ the old one, you deploy a new version. When you deploy a microservice, you create a Kubernetes deployment YAML file that defines the Label and the version of the image.
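A manifest of this kind might look like the following sketch, built here as a Python dict and printed as JSON (which Kubernetes accepts alongside YAML). The structure follows the `apps/v1` Deployment schema; the label dh-ms-general is reused as the name, while the registry, image name and tag are hypothetical placeholders.

```python
import json


def deployment_manifest(label: str, image: str, replicas: int = 1) -> dict:
    """Return a Kubernetes apps/v1 Deployment as a plain dict."""
    labels = {"app": label}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": label, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                # The image tag is what changes when a new version is deployed.
                "spec": {"containers": [{"name": label, "image": image}]},
            },
        },
    }


# Hypothetical image reference; only the label comes from the article.
manifest = deployment_manifest("dh-ms-general", "registry.example.test/dh-ms-general:1.0.1")
print(json.dumps(manifest, indent=2))
```

Deploying a new version means emitting the same manifest with a different image tag, not modifying the running container.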

In the above example, our Label is dh-ms-general. When a microservice Label is reused for a new container image, Kubernetes stops using the old image. But in some cases, a second Label may be used allowing both services to be running at the same time. This is controlled by the configuration of your ingresses. Our new pipeline process must incorporate these new features of our modern architecture.

Comparing Monolithic to Microservice Pipelines

What does your life cycle pipeline look like when we manage small functions vs. a monolithic application running in a modern architecture? Below is a comparison for each category and their potential shift for supporting a microservice pipeline.

Change Request

Monolithic:

Logging a user problem ticket, enhancement request or anomaly based on an application.

Microservices:

This process will remain relatively un-changed in a microservice pipeline. Users will continue to open tickets for bugs and enhancements. The difference will be sorting out which microservice needs the update, and which version of the microservice the ticket was opened against. Because a microservice can be used by multiple applications, dependency management and impact analysis will become more critical for helping to determine where the issue lies.
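The impact analysis described here amounts to a reverse lookup from a microservice version to its consumers. A minimal sketch, assuming a catalog of which application versions use which service versions is available; the catalog contents and function names below are invented for illustration.

```python
from collections import defaultdict

# Hypothetical catalog: application version -> {microservice: version} it consumes.
APPLICATIONS = {
    "storefront;v12": {"cart": "1.4.0", "login": "2.0.1"},
    "mobile-api;v7": {"cart": "1.4.0", "search": "0.9.3"},
    "backoffice;v3": {"login": "1.9.0"},
}


def build_reverse_index(applications: dict) -> dict:
    """Map each (service, version) to the applications that depend on it."""
    index = defaultdict(set)
    for app, services in applications.items():
        for service, version in services.items():
            index[(service, version)].add(app)
    return index


def impacted_applications(service: str, version: str, applications: dict) -> list:
    """Applications affected by a ticket against one microservice version."""
    return sorted(build_reverse_index(applications)[(service, version)])


affected = impacted_applications("cart", "1.4.0", APPLICATIONS)
```

A ticket opened against `cart` 1.4.0 touches both the mobile API and the storefront, while a ticket against `login` 1.9.0 only concerns the back office.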

Version Control

Monolithic:

Tracking changes in source code content. Branching and merging updates allowing multiple developers to work on a single file.

Microservices:

While versioning your microservice source code will still be done, your source code will be smaller, 100-300 lines of code versus 1,000 – 3,000 lines of code. This impacts the need for branching and merging. The concept of merging ‘back to the trunk’ is more of a monolithic concept, not a microservice concept. And how often will you branch code that is a few hundred lines long?

Artifact Repository

Monolithic:

Originally built around Maven, an artifact repository provides a central location for publishing JAR files, Node.js packages, JavaScript packages, Docker images and Python modules. When you run your build, your package manager (Maven, npm, pip) performs the dependency management for tracking transitive dependencies.

Microservices:

Again, these tools supported monolithic builds and solved dependency management to resolve compile/link steps. We move away from monolithic builds, but we still need to build our container and resolve our dependencies. These tools will help us build containers by determining the transitive dependencies needed for the container to run.

Builds

Monolithic:

Executes a serial process for calling compilers and linkers to translate source code into binaries (JAR, WAR, EAR, .exe, .dll, Docker images). Common languages that support the build logic include Make, Ant, Maven, Meister, npm, pip, and Docker Build. The build calls on artifact repositories to perform dependency management based on what versions of libraries have been specified by the build script.

Microservices:

For the most part, builds will look very different in a microservice pipeline. A build of a microservice will involve creating a container image and resolving the dependencies needed for the container to run. You can think of a container image as our new binary. This will be a relatively simple step and not involve a monolithic compile/link of an entire application. It will only involve a single microservice. Linking is done at runtime with the RESTful API call coded into the microservice itself.

Software Configuration Management (SCM)

Monolithic:

The build process is the central tool for performing configuration management. Developers set up their build scripts (POM files) to define what versions of external libraries they want to include in the compile/link process. The build performs configuration management by pulling code from version control based on a ‘trunk’ or ‘branch.’ A Software Bill of Material can be created to show all artifacts that were used to create the application.

Microservices:

Much of what we used to do to configure our application occurred at the software ‘build.’ This is where we made very careful decisions about what versions of source code and libraries we would use to build a version of our monolithic application. But ‘builds’ as we know them go away in a microservice pipeline. For the most part, the version and build configuration shifts to runtime with microservices. While the container image has a configuration, the broader picture of the configuration happens at run-time in the cluster via the APIs.

In addition, our SCM will begin to bring in the concept of Domain Driven Design where you are managing an architecture based on the microservice ‘problem space.’ New tooling will enter the market to help with managing your Domains, your logical view of your application and to track versions of applications to versions of services. In general, SCM will become more challenging as we move away from resolving all dependencies at the compile/link step and must track more of it across the pipeline.

Continuous Integration (CI)

Monolithic:

CI is the triggered process of pulling code and libraries from version control and executing a Build based on a defined ‘quiet time.’ This process improved development by ensuring that code changes were integrated as frequently as possible to prevent broken builds, thus the term continuous integration.

Microservices:

Continuous Integration was originally adopted to keep us re-compiling and linking our code as frequently as possible in order to prevent the build from breaking. The goal was to get to a clean ’10-minute build’ or less. With microservices, you are only building a single ‘function.’ This means that an integration build is no longer needed. CI will eventually go away, but the process of managing a continuous delivery pipeline will remain important with a step that creates the container.

Code Scanning

Monolithic:

Code scanners have evolved from looking at coding techniques for memory issues and bugs to scanning for open source library usage, licenses and security problems.

Microservices:

Code scanners will continue to be important in a microservice pipeline but will shift to scanning the container image more than the source. Some will be used during the container build focusing on scanning for open source libraries and licensing while others will focus more on security issues with scanning done at runtime.

Continuous Testing

Monolithic:

Continuous testing was born out of test automation tooling. These tools allow you to perform automated tests on your entire application including timings for database transactions. The goal of these tools is to improve both the quality and speed of the testing efforts driven by your CD workflow.

Microservices:

Testing will always be an important part of the life cycle process. The difference with microservices will be understanding impact and risk levels. Testers will need to know what applications depend on a version of a microservice and what level of testing should be done across applications. Test automation tools will need to understand microservice relationships and impact. Testing will grow beyond testing a single application and instead will shift to testing service configurations in a cluster.

Security

Monolithic:

Security solutions allow you to define or follow a specific set of standards. They include code scanning, container scanning and monitoring. This field has grown into the DevSecOps movement where more of the security activities are being driven by Continuous Delivery.

Microservices:

Security solutions will shift further ‘left’ adding more scanning around the creation of containers. As containers are deployed, security tools will begin to focus on vulnerabilities in the Kubernetes infrastructure as they relate to the content of the containers.

Continuous Delivery Orchestration (CD)

Monolithic:

Continuous Delivery is the evolution of continuous integration triggering ‘build jobs’ or ‘workflows’ based on a software application. It auto executes workflow processes between development, testing and production orchestrating external tools to get the job done. Continuous Delivery calls on all players in the lifecycle process to execute in the correct order and centralizes their logs.

Microservices:

Let’s start with the first and most obvious difference between a microservice pipeline and a monolithic pipeline. Because microservices are independently deployed, most organizations moving to a microservice architecture tell us they use a single pipeline workflow for each microservice. Also, most companies tell us that they start with 6-10 microservices and grow to 20-30 microservices per traditional application. This means you are going to have hundreds if not thousands of workflows. CD tools will need to include the ability to template workflows allowing a fix in a shared template to be applied to all child workflows. Managing hundreds of individual workflows is not practical. In addition, plug-ins need to be containerized and decoupled from a version of the CD tool. And finally, look for actions to be event driven, with the ability for the CD engine to listen to multiple events, run events in parallel and process thousands of microservices through the pipeline.
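The templating idea can be sketched in a few lines. The template fields, step names and the `render_workflow` helper are hypothetical and not taken from any particular CD tool; the point is only that per-service workflows are rendered from one shared definition.

```python
import copy

# Shared template: a fix here reaches every workflow rendered from it.
WORKFLOW_TEMPLATE = {
    "steps": ["build-image", "scan-image", "deploy", "smoke-test"],
    "timeout_minutes": 15,
}


def render_workflow(service: str, template: dict, overrides: dict = None) -> dict:
    """Create a per-microservice workflow from the shared template."""
    workflow = copy.deepcopy(template)  # children never mutate the template
    workflow.update(overrides or {})
    workflow["service"] = service
    return workflow


services = ["cart", "login", "search"]
workflows = {name: render_workflow(name, WORKFLOW_TEMPLATE) for name in services}

# One change to the template, then re-render: all child workflows pick it up.
WORKFLOW_TEMPLATE["steps"].insert(2, "license-check")
workflows = {name: render_workflow(name, WORKFLOW_TEMPLATE) for name in services}
```

With three services this saves little, but with hundreds of workflows the difference between one edit and hundreds of edits is the whole argument.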

Continuous Deployments

Monolithic:

This is the process of moving artifacts (binaries, containers, scripts, etc.) to the physical runtime environments on a high frequency basis. In addition, deployment tools track where an artifact was deployed along with audit information (who, where, what) providing core data for value stream management. Continuous deployment is also referred to as Application Release Automation.

Microservices:

The concept of deploying an entire application will simply go away. Instead, deployments will be a mix of tracking the Kubernetes deployment YAML file with the ability to manage the application’s configuration each time a new microservice is introduced to the cluster. What will become important is the ability to track the ‘logical’ view of an application by associating which versions of the microservices make up an application. This is a big shift. Deployment tools will begin generating the Kubernetes YAML file removing it from the developer’s to-do list. Deployment tools will automate the tracking of versions of the microservice source to the container image to the cluster and associated applications to provide the required value stream reporting and management.
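One possible way to model that ‘logical’ view is to treat an application version as nothing more than a named set of microservice versions. The sketch below is an assumption about how such tracking could work, not a description of any deployment tool; all names and version numbers are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApplicationVersion:
    """Logical view: an application is a named set of microservice versions."""
    name: str
    version: int
    services: tuple  # sorted tuple of (service, version) pairs

    def with_service(self, service: str, service_version: str) -> "ApplicationVersion":
        """Deploying a new microservice version yields a new application version."""
        updated = dict(self.services)
        updated[service] = service_version
        return ApplicationVersion(self.name, self.version + 1, tuple(sorted(updated.items())))


def diff(old: ApplicationVersion, new: ApplicationVersion) -> dict:
    """Report which services changed between two application versions."""
    before, after = dict(old.services), dict(new.services)
    return {
        svc: (before.get(svc), after.get(svc))
        for svc in sorted(set(before) | set(after))
        if before.get(svc) != after.get(svc)
    }


v1 = ApplicationVersion("storefront", 1, (("cart", "1.4.0"), ("login", "2.0.1")))
v2 = v1.with_service("cart", "1.5.0")
changes = diff(v1, v2)
```

Nothing was "deployed as an application" here: only `cart` moved, yet the storefront now has a new, traceable version and a diff that says exactly what changed.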

Conclusion

As we shift from managing monolithic applications to microservices, we will need to create a new microservice pipeline. From the need to manage hundreds of workflows in our CD pipeline, to the need for versioning microservices and their consuming application versions, much will be different. While there are changes, the core competencies we have defined in traditional CD will remain important even if it is just a simple function that we are now pushing independently across the pipeline.

About the Author

Tracy Ragan is CEO of DeployHub and serves on the Continuous Delivery Foundation Board. She is a microservice evangelist with expertise in software configuration management, builds and release. Tracy was a consultant to Wall Street firms on build and release management for 7 years prior to co-founding OpenMake Software in 1995. She was a founding member of the Eclipse organization and served on the board for 5 years. She is a recognized leader and has been published in multiple industry publications as well as presenting to audiences at industry conferences. Tracy co-founded DeployHub in 2018 to serve the microservice development community.