Contributed by Anonymous for the CDF Newsletter – August 2020 Article


Introduction

DevOps, or DevSecOps, comes up in almost every quality-related or customer meeting. At times it is treated as a value-add rather than a service. The combination of people, processes, and tools is what turns the culture change into reality. DevOps is a software development practice that focuses on culture change and brings value to the end-to-end process of Application Lifecycle Management. “DevOps pipeline” or “CI/CD pipeline” is a popular term. What does it mean? A pipeline covers the operations involved in Application Lifecycle Management, from development to deployment in different environments. Continuous Integration (CI) and Continuous Delivery (CD) are popular DevOps practices. Continuous Integration involves development, code analysis, unit testing, code coverage calculation, and build activities, all automated using various tools. Continuous Delivery is all about deploying your package into different environments so end users can access it. There are different ways to create a pipeline, or orchestration, that involves Continuous Integration, Continuous Delivery, Continuous Testing, Continuous Deployment, Continuous Monitoring, and other DevOps practices. Each tool provides its own ways to create a pipeline. This article describes different approaches to creating a pipeline in Jenkins.

The rule of three is a very famous writing principle. A trio of sections or bullet points helps information stick in the reader’s head. Remember the Cloud Service Models: 1) Infrastructure as a Service, 2) Platform as a Service, 3) Software as a Service. These are the service models we remember the most. The number later grew with many more services, but this is the example I immediately recall. In this article, fortunately, we have three ways to create a pipeline while considering Pipeline as Code. So we will map these three ways to three different types of ice cream and explain each in three sections. Let’s see if it becomes interesting.

Ice cream is such a delight; it is cold, sweet, creamy, and just amazing. Isn’t it? I usually have multiple family packs in my refrigerator, and that shows my eternal love for ice cream. I love Vanilla, Ben & Jerry’s Non-Dairy Ice Cream (which I ate at Basel Airport in Switzerland; I still have the carton), and Italy’s gelato, which I ate once in Kochi (visit my Introduction Blog as a CDF Ambassador, where all the photographs were taken at the shop where I ate gelato for the first time), in descending order.

Why am I talking about ice creams? Because I would love to map my love for ice cream to my love for Jenkins Pipelines. It is customary to give some overview of Jenkins, even though we know Jenkins is one of the most popular automation servers for implementing DevOps practices.

Jenkins is an open-source automation server that integrates with existing code analysis tools, build tools, testing tools, cloud and container platforms, and governance and monitoring tools for application lifecycle management, so that the end-to-end process can be automated based on requirements. It helps an organization implement DevOps practices.

Jenkins 2.0 and later versions have built-in support for delivery pipelines. Jenkins 2.x has an improved user experience and is fully backwards compatible. This matters because, in today’s competitive market, all organizations compete to release better quality products with faster time to market.

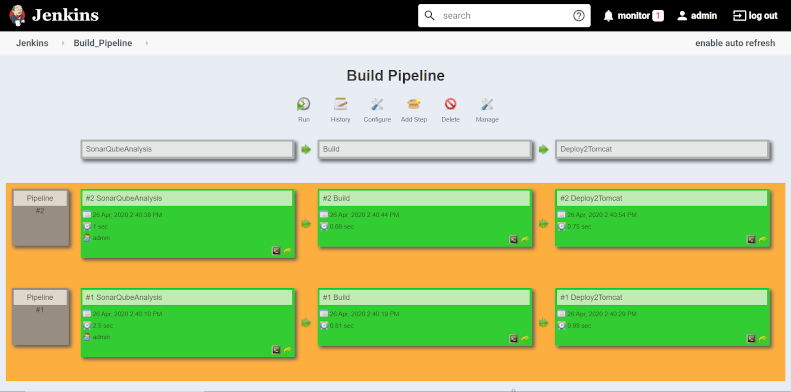

Earlier, Jenkins was known for Continuous Integration, even though it supported plugins for automated deployment or Continuous Delivery. The Build Pipeline plugin is famous for creating a pipeline using upstream and downstream jobs in Jenkins.

After Jenkins 2.0, the situation has changed. Let’s try to compare Pipelines in Jenkins with the ice creams I love the most.

Pipeline as Code using Jenkinsfile

Pipeline as Code is very popular nowadays to create end-to-end pipelines for automating application lifecycle management phases. Your Orchestration or Pipeline remains in the code repository and hence you can manage versions of it and it is very easy to retain your changes. In Jenkins, we can create a Pipeline in three ways:

- Scripted Pipeline

- Declarative Pipeline

- Blue Ocean

Let’s get some familiarity with all three by mapping them with some of my favourite ice creams.

Vanilla Ice Cream: Scripted Pipeline

The Scripted Pipeline has a strictly Groovy-based syntax. In this pipeline, we have more control over the script, and it is almost as good as script programming, with conditional flows and loops. It helps the development team create extensive and complex pipelines. However, not everyone can work with or understand these pipelines, so the learning curve is steeper. Vanilla is the most commonly used ice cream because it can be flavored and utilized in many ways. Similarly, Scripted Pipeline is very flexible because it uses a programming-like syntax, but it also requires a lot of programming knowledge to create a pipeline. There are also cases where script is used inside a Declarative Pipeline, because the declarative syntax has some limitations.
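The conditional flows and loops mentioned above can be sketched as follows. This is a minimal illustration, not a reference implementation: the module names and Maven commands are hypothetical, `checkout scm` assumes the Jenkinsfile is loaded from a repository, and `env.BRANCH_NAME` is only populated in multibranch jobs.

```groovy
// Scripted Pipeline sketch: plain Groovy control flow around stages.
// Module names and Maven commands below are hypothetical placeholders.
node {
    stage('Checkout') {
        checkout scm   // checks out the repository configured for the job
    }

    // A loop that generates one build stage per module
    def modules = ['core', 'api', 'web']
    for (module in modules) {
        stage("Build ${module}") {
            sh "mvn -B -pl ${module} -am package"
        }
    }

    // A conditional that adds the deploy stage only for one branch
    if (env.BRANCH_NAME == 'main') {
        stage('Deploy') {
            echo 'Deploying build from main'
        }
    } else {
        echo "Skipping deploy for branch ${env.BRANCH_NAME}"
    }
}
```

Because the stages are produced by ordinary Groovy code, the shape of the pipeline can change from run to run, which is both the strength of this style and the reason it is harder to read.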

Use case

Scripted Pipeline uses Groovy script to create an end-to-end pipeline or orchestration for configuring Continuous Integration, Continuous Delivery, Continuous Testing, Continuous Deployment, Resource Management, Governance and Continuous Monitoring.

Components

In Scripted Pipeline, one or more node blocks are used to create a pipeline.

    node {
        /* Stages and Steps */
    }

A node block is an important part of a Scripted Pipeline; it defines the end-to-end process of application lifecycle management that is being automated. A node (or agent) is a physical machine, virtual machine, or container that is part of the Jenkins automation environment and is capable of executing a Pipeline.

    node {
        stage('SCA') {
            // steps
        }
        stage('CI') {
            // steps
        }
        stage('CD') {
            // steps
        }
    }

A stage block groups tasks that logically belong together. For example, a Continuous Integration stage might include code analysis, unit test execution, code coverage calculation, and build. A step represents a single task in a stage. One stage can have one or many steps.
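To make the relationship between stages and steps concrete, here is a hedged sketch of the same three stages with steps filled in. It assumes a Maven project, the JUnit plugin for the `junit` step, and a hypothetical `deploy.sh` script in the repository; none of these come from the original article.

```groovy
// Scripted Pipeline sketch: each stage groups related steps.
// The Maven goals and deploy.sh are illustrative assumptions.
node {
    stage('SCA') {
        checkout scm
        // Static code analysis; assumes Checkstyle suits the project
        sh 'mvn -B checkstyle:check'
    }
    stage('CI') {
        // Compile, run unit tests, and package
        sh 'mvn -B clean verify'
        // Publish test results (requires the JUnit plugin)
        junit 'target/surefire-reports/*.xml'
        // Keep the built package with the build record
        archiveArtifacts artifacts: 'target/*.jar', fingerprint: true
    }
    stage('CD') {
        // Hypothetical deployment script taking the target environment
        sh './deploy.sh staging'
    }
}
```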

Experience

Scripted Pipeline was my first exposure to Pipeline as Code as a DevOps Engineer, around 2016. It was everywhere, and I wanted to give it a try, as I had started creating pipelines with the Build Pipeline plugin. Soon, I realized that it is more useful for maintenance. The entire team liked the idea and the implementation, but it took us time to get familiar with it. It was difficult to implement Scripted Pipeline at a large scale, so we kept using the Build Pipeline plugin for a while.

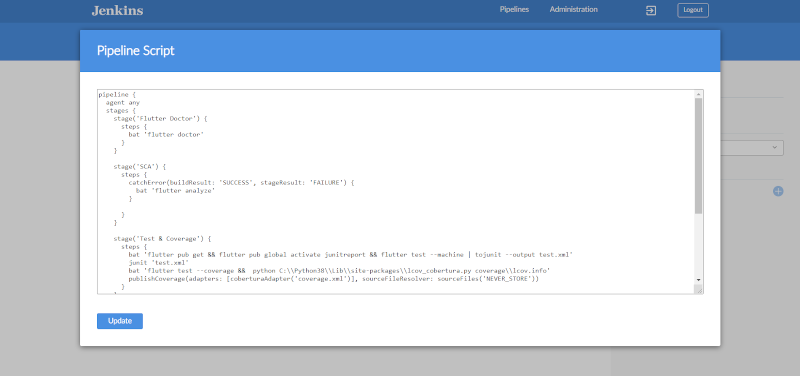

Ben & Jerry’s Non-Dairy Ice Cream: Declarative Pipeline

Ben & Jerry’s Non-Dairy Ice Cream is banana flavored with chocolate chunks and walnuts. It is very specific in its making. It’s a Ben & Jerry’s classic! Similarly, Declarative Pipelines are very specific. The Declarative Pipeline is a feature where the pipeline is written in a Jenkinsfile that is stored in a code repository such as Git, GitHub, or Bitbucket. A Declarative Pipeline is less flexible than a Scripted Pipeline, and there are various tasks for which scripts are still needed in a Declarative Pipeline.
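As a rough illustration of where scripts come in, a Declarative Pipeline can embed Groovy logic through a `script` block inside `steps`. The sketch below assumes a Maven build, and the target names are hypothetical.

```groovy
// Declarative Pipeline sketch with an embedded script block.
pipeline {
    agent any
    stages {
        stage('CI') {
            steps {
                sh 'mvn -B clean verify'
                // Declarative steps do not accept arbitrary Groovy,
                // so variables and loops go inside a script block.
                script {
                    def targets = ['dev', 'qa']   // hypothetical environments
                    for (target in targets) {
                        echo "Would publish the package to ${target}"
                    }
                }
            }
        }
    }
}
```

Keeping such blocks small preserves most of the readability that makes the declarative syntax attractive in the first place.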

Use case

Declarative Pipeline uses a domain-specific language (DSL) to create an end-to-end pipeline or orchestration for configuring Continuous Integration, Continuous Delivery, Continuous Testing, Continuous Deployment, Resource Management, Governance, and Continuous Monitoring. Comparatively, the Declarative Pipeline is easy to understand and learn.

Components

A pipeline block is used in a Declarative Pipeline; it defines the end-to-end process of application lifecycle management that is being automated.

    pipeline {
        /* Stages and Steps */
    }

An agent is a physical machine, virtual machine, or container that is part of the Jenkins automation environment and is capable of executing a Pipeline.

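    pipeline {
        agent any
        stages {
            stage('SCA') {
                steps {
                    // static code analysis steps go here
                    echo 'SCA'
                }
            }
            stage('CI') {
                steps {
                    // build and test steps go here
                    echo 'CI'
                }
            }
            stage('CD') {
                steps {
                    // deployment steps go here
                    echo 'CD'
                }
            }
        }
    }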

A stage block groups tasks that logically belong together. For example, a Continuous Integration stage might include code analysis, unit test execution, code coverage calculation, and build. A step represents a single task in a stage. One stage can have one or many steps.
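Beyond stages and steps, the declarative syntax offers dedicated sections for common needs. The sketch below shows three of them: `agent` with a label, `environment`, and `post`. The agent label, the `APP_ENV` variable, and `deploy.sh` are hypothetical, and the `junit` step assumes the JUnit plugin is installed.

```groovy
// Declarative Pipeline sketch using agent, environment, and post sections.
pipeline {
    agent { label 'linux' }        // hypothetical agent label
    environment {
        APP_ENV = 'staging'        // exposed to steps as an environment variable
    }
    stages {
        stage('CI') {
            steps {
                sh 'mvn -B clean verify'
            }
        }
        stage('CD') {
            steps {
                // Single quotes: the shell, not Groovy, expands $APP_ENV
                sh './deploy.sh "$APP_ENV"'
            }
        }
    }
    post {
        always {
            // Publish test results even when a stage failed
            junit testResults: 'target/surefire-reports/*.xml', allowEmptyResults: true
        }
        failure {
            echo 'Pipeline failed'
        }
    }
}
```

In a Scripted Pipeline the equivalent of `post` would typically be written by hand with `try`/`finally`, which is one reason the declarative form tends to be easier to read.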

Experience

Our first reaction to the Declarative Pipeline was better than we expected. We loved the simple syntax, completed our proof of concept successfully, and realized that it is much more useful, easier to understand, and quicker to learn. We came across the concept of the multibranch pipeline at the same time and realized how much time and effort it can save when managing customization.
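One way a multibranch pipeline saves customization effort is that a single Jenkinsfile can serve every branch, with branch-specific behavior expressed through a `when` condition. The following is a minimal sketch under that assumption; the branch name `main` and the Maven command are illustrative.

```groovy
// Declarative sketch for a multibranch job: one Jenkinsfile for all branches.
pipeline {
    agent any
    stages {
        stage('CI') {
            // Runs for every branch and pull request
            steps {
                sh 'mvn -B clean verify'
            }
        }
        stage('CD') {
            // Runs only when the job was created for the main branch
            when { branch 'main' }
            steps {
                echo "Deploying ${env.BRANCH_NAME}"
            }
        }
    }
}
```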

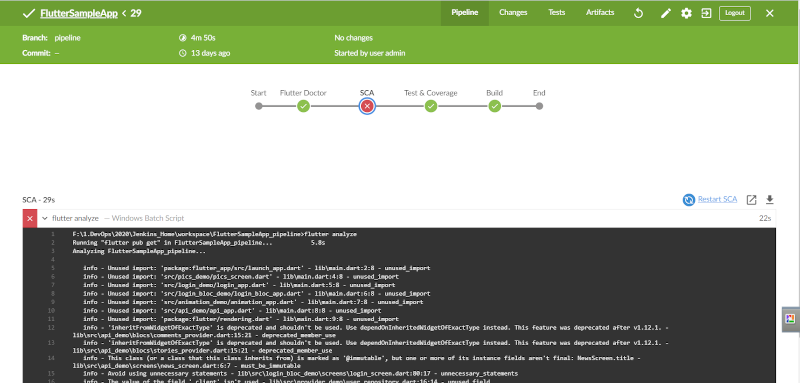

Italy’s Gelato: Blue Ocean – Innovative User Experience

We went to Kochi, India, for a customer presentation. At night we visited CreamCraft, a premium Italian ice cream store.

I selected the flavour: HONEY PEANUT CARAMEL gelato. It’s honey paired with toasty peanuts and gooey caramel and I loved it from the bottom of my heart.

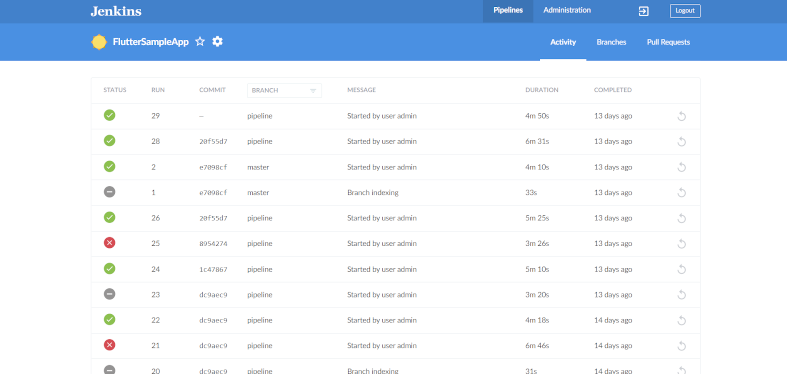

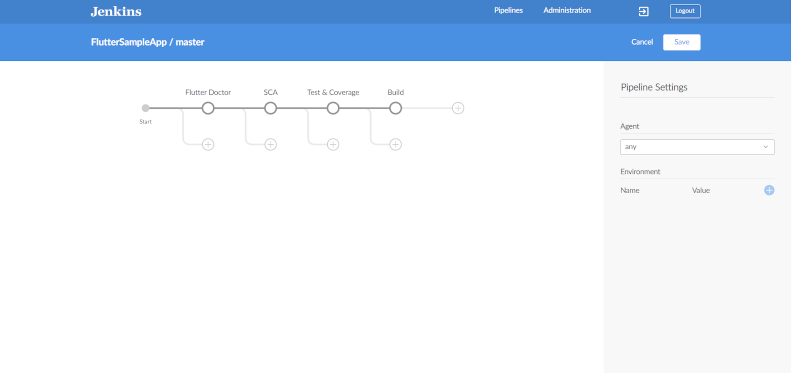

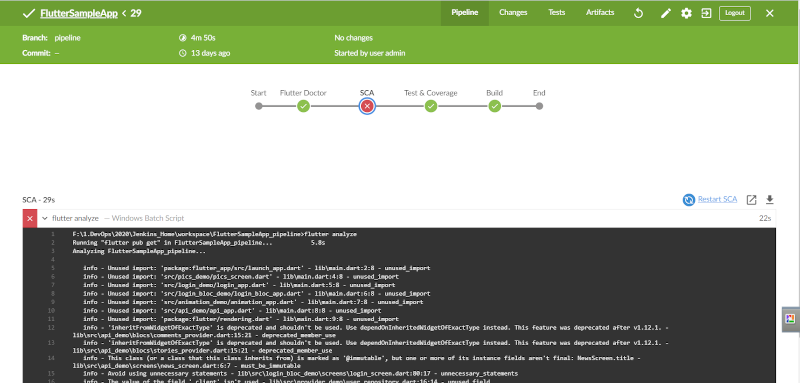

I loved the store and later found out that they continuously do research and innovation to improve their gelato. Similarly, I loved the innovation from Jenkins: Blue Ocean. Thanks to it, I no longer need to learn the scripting syntax up front. It helps me create a Declarative Pipeline script from a UI. Yes, you read that right. Jenkins pioneered a trend of ease of use and adoption. Blue Ocean provides an easy way to create a Declarative Pipeline using the new user experience available in the Blue Ocean dashboard. It is like creating a script by selecting components, steps, or tasks.

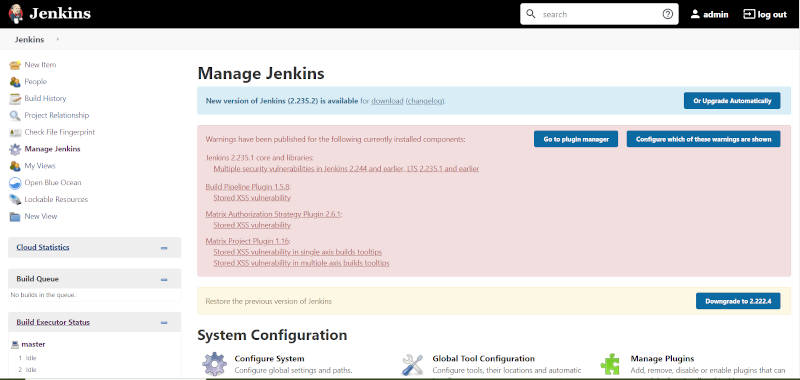

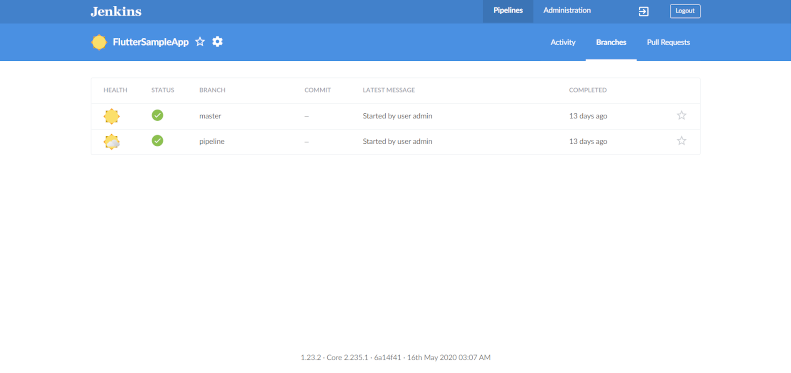

In a standard Jenkins setup, the Blue Ocean plugin needs to be installed first, starting from Manage Jenkins.

Use case

Blue Ocean provides a user interface (UI) to create a declarative end-to-end pipeline or orchestration for configuring Continuous Integration, Continuous Delivery, Continuous Testing, Continuous Deployment, Resource Management, Governance, and Continuous Monitoring. Comparatively, the Declarative Pipeline is easy to understand and to learn. As a user, you don’t need knowledge of any scripting language. However, if you’re good with scripting, it’s a bonus!

Components

Blue Ocean has many sections that help you to start your pipeline quickly.

Experience

Blue Ocean was the talk of the town in webinars and blogs as soon as it was introduced. Digital transformation and culture transformation are like a roller coaster ride, and you can rarely change things in a big-bang way. Phase-wise implementation is always better. We decided to first perform a proof of concept, and that’s when we realized the potential of this approach for beginners. Our next task was to bring it into projects that were completely greenfield, and we succeeded in our approach. We used a Declarative Pipeline created using Blue Ocean in every new project, following a “Blue Ocean First” strategy. There were times when plugin-related issues and other hurdles forced us to use other approaches, but, as I said, Blue Ocean First was our strategy.

Now that we are more experienced, we tend to use a Jenkinsfile directly because we’re comfortable with the syntax of Declarative Pipeline. Blue Ocean is a great feature when you want to learn how to create a Declarative Pipeline. In my experience, it is easier to use Blue Ocean through the Docker image. It’ll help you get through installation and configuration with lightning speed.

Conclusion

Most tools support the Pipeline as Code concept, such as Azure DevOps, where you can create azure-pipelines.yaml. In Azure DevOps, we can create a build and release pipeline using tasks, and it has recently introduced multi-stage pipelines. However, Jenkins is at a mature stage of Pipeline as Code implementation for the following reasons:

- Ease of Use

- Easy Learning Curve

- Clear and defined roadmap of future implementation

- End-to-end Pipeline using Blue Ocean or Declarative Syntax

- Supports Scripted Syntax as well

For more information, watch this Declarative Pipelines with Jenkins video.