Originally posted on the Armory blog by German Muzquiz

Spinnaker Operator is now Beta!

With Spinnaker Operator, define all the configurations of Spinnaker in native Kubernetes manifest files, as part of the Kubernetes kind “SpinnakerService” defined in its own Custom Resource Definition (CRD). With this approach, you can customize, save, deploy and generally manage Spinnaker configurations in a standard Kubernetes workflow for managing manifests. No need to learn a new CLI like Halyard, or worry about how to run that service.

The Spinnaker Operator has two flavors to choose from, depending on which Spinnaker you want to use: Open Source or Armory Spinnaker.

With the Spinnaker Operator, you can:

Additionally, Spinnaker Operator has some exclusive new features not available with other deployment methods like Halyard:

Let’s look at an example workflow.

Assuming you have stored SpinnakerService manifests under source control, you have a pipeline in Spinnaker to apply these manifests automatically on source control pushes (Spinnaker deploying Spinnaker) and you want to add a new Kubernetes account:

apiVersion: spinnaker.armory.io/v1alpha2

kind: SpinnakerService

metadata:

name: spinnaker

spec:

spinnakerConfig:

config:

version: 2.17.1 # the version of Spinnaker to be deployed

persistentStorage:

persistentStoreType: s3

s3:

bucket: acme-spinnaker

rootFolder: front50

+ providers:

+ kubernetes:

+ enabled: true

+ accounts:

+ - name: kube-staging

+ requiredGroupMembership: []

+ providerVersion: V2

+ permissions: {}

+ dockerRegistries: []

+ configureImagePullSecrets: true

+ cacheThreads: 1

+ namespaces: []

+ omitNamespaces: []

+ kinds: []

+ omitKinds: []

+ customResources: []

+ cachingPolicies: []

+ oAuthScopes: []

+ onlySpinnakerManaged: false

+ kubeconfigFile: encryptedFile:k8s!n:spinnaker-secrets!k:kube-staging-kubeconfig # secret name: spinnaker-secrets, secret key: kube-staging-kubeconfig

+ primaryAccount: kube-staging

We hope that the Spinnaker Operator will make installing, configuring and managing Spinnaker easier and more powerful. We’re enhancing Spinnaker iteratively, and welcome your feedback.

Get OSS Spinnaker Operator (documentation)

Get Armory Spinnaker Operator (documentation)

Interested in learning more about the Spinnaker Operator? Reach out to us here or on Spinnaker Slack – we’d love to chat!

You may have heard that Tekton Pipelines is now beta! That’s not beta like the video format but beta like Kubernetes! Okay I’ll stop trying to make jokes, because compatibility is no laughing matter for folks who want to build on top of and use Tekton, and that’s why we’ve declared beta, so that you can feel more confident in using it.

So what does beta mean exactly? It means for Tekton what it means for Kubernetes, and it boils down to two things:

You might be wondering what “the API” means in this context – good question! It’s the specifications of the CRDs themselves and runtime details like the special directories that Tekton makes.

Not all of Tekton is beta however! Right now it’s just Tekton Pipelines and it’s only the following CRDs:

This means that other types that you might like, such as Conditions and PipelineResources (see the next section!) are still alpha and don’t (yet!) have the same beta level guarantees.

You can always refer to our API compatibility docs in our repo if you forget!

What about them indeed! If you are part of the Tekton community, you’ll know that we keep going back and forth on our love/hate-able PipelineResources – the feature you love until it doesn’t work.

A few months ago, our “difficult to understand, hard to debug” friend was challenged by the community: what would the Tekton world look like without PipelineResources? And when we went on that journey, we discovered features which PipelineResources gave us which were super useful on their own:

So we focused on adding those features and brought them to beta. In the meantime, we keep asking the question: do we still need PipelineResources? And what would they look like if redesigned with workspaces and results? We’re still asking those questions and that’s why PipelineResources aren’t beta (yet)!

We know some users really love them: “There are dozens of us,” – @dlorenc. So we haven’t given up on them yet, and there are some things that you just still can’t do well without them: for example, how do you consistently represent artifacts such as images moving through Pipelines? You can’t! So the investigation continues.

In the meantime, we’ve made Task equivalents of some of our PipelineResources in the Tekton catalog, such as PullRequests, GCS, and git.

Hooray! Our shiny new site is live! Right this way -> https://tekton.dev/

Tekton Documentation is now hosted on the website at https://tekton.dev/docs/. And interactive tutorials are hosted at https://tekton.dev/try/. There is just one interactive tutorial hosted right now but more are in process to get published, so watch this space!

We’re hard at work on more nifty Tekton stuff to make your CI/CD Pipelines more powerful and more portable by achieving Tekton’s mission:

Be the industry-standard, cloud-native CI/CD platform components and ecosystem.

Check out more on our mission and our 2020 roadmap in our community repo.

Thanks to all of the many amazing contributors who have gotten us to this point! The list below is people credited in Tekton Pipelines release notes, but for the complete list of everyone contributing to Tekton check out our devstats!

Tekton Pipelines, the core component of the Tekton project, is moving to beta status with the release of v0.11.0 this week. Tekton is an open source project creating a cloud-native framework you can use to configure and run continuous integration and continuous delivery (CI/CD) pipelines within a Kubernetes cluster.

Tekton development began as Knative Build before becoming a founding project of the CD Foundation under the Linux Foundation last year.

The Tekton project follows the Kubernetes deprecation policies. With Tekton Pipelines upgrading to beta, most Tekton Pipelines CRDs (Custom Resource Definition) are now at beta level. This means overall beta level stability can be relied on. Please note, Tekton Triggers, Tekton Dashboard, Tekton Pipelines CLI and other components are still alpha and may continue to evolve from release to release.

Tekton encourages all Tekton projects and users to migrate their integrations to the new apiVersion. Users of Tekton can see the migration guide on how to migrate from v1alpha1 to v1beta1.

Tekton is in its second year of development and is currently being used by both free and commercial offerings by multiple companies.

Now is a great time to contribute. There are many areas where you can jump in. For example, the Tekton Task Catalog allows you to share and reuse the components that make up your Pipeline. You can set a Cluster scope, and make tasks available to all users in a namespace, or you can set a Namespace scope, and make it usable only within a specific namespace.

From Dailymotion, a French video-sharing technology platform with over 300 million unique monthly users

At Dailymotion, we are hosting and delivering premium video content to users all around the world. We are building a large variety of software to power this service, from our player or website to our GraphQL API or ad-tech platform. Continuous Delivery is a central practice in our organization, allowing us to push new features quickly and in an iterative way.

We are early adopters of Kubernetes: we built our own hybrid platform, hosted both on-premise and on the cloud. And we heavily rely on Jenkins to power our “release platform”, which is responsible for building, testing, packaging and deploying all our software. Because we have hundreds of repositories, we are using Jenkins Shared Libraries to keep our pipeline files as small as possible. It is an important feature for us, ensuring both a low maintenance cost and a homogeneous experience for all developers – even though they are working on projects using different technology stacks. We even built Gazr, a convention for writing Makefiles with standard targets, which is the foundation for our Jenkins Pipelines.

In 2018, we migrated our ad-tech product to Kubernetes and took the opportunity to set up a Jenkins instance in our new cluster – or better yet move to a “cloud-native” alternative. Jenkins X was released just a few months before, and it seemed like a perfect match for us:

While adopting Jenkins X we discovered that it is first a set of good practices derived from the best performing teams, and then a set of tools to implement these practices. If you try to adopt the tools without understanding the practices, you risk fighting against the tool because it won’t fit your practices. So you should start with the practices. Jenkins X is built on top of the practices described in the Accelerate book, such as micro-services and loosely-coupled architecture, trunk-based development, feature flags, backward compatibility, continuous integration, frequent and automated releases, continuous delivery, Gitops, … Understanding these practices and their benefits is the first step. After that, you will see the limitations of your current workflow and tools. This is when you can introduce Jenkins X, its workflow and set of tools.

We’ve been using Jenkins X since the beginning of 2019 to handle all the build and delivery of our ad-tech platform, with great benefits. The main one being the improved velocity: we used to release and deploy every two weeks, at the end of each sprint. Following the adoption of Jenkins X and its set of practices, we’re now releasing between 10 and 15 times per day and deploying to production between 5 and 10 times per day. According to the State of DevOps Report for 2019, our ad-tech team jumped from the medium performers’ group to somewhere between the high and elite performers’ groups.

But these benefits did not come for free. Adopting Jenkins X early meant that we had to customize it to bypass its initial limitations, such as the ability to deploy to multiple clusters. We’ve detailed our work in a recent blog post, and we received the “Most Innovative Jenkins X Implementation” Jenkins Community Award in 2019 for it. It’s important to note that most of the issues we found have been fixed or are being fixed. The Jenkins X team has been listening to the community feedback and is really focused on improving their product. The new Jenkins X Labs is a good example.

As our usage of Jenkins X grows, we’re hitting more and more the limits of the single Jenkins instance deployed as part of Jenkins X. In a platform where every component has been developed with a cloud-native mindset, Jenkins is the only one that has been forced into an environment for which it was not built. It is still a single point of failure, with a much higher maintenance cost than the other components – mainly due to the various plugins.

In 2019, the Jenkins X team started to replace Jenkins with a combination of Prow and Tekton. Prow (or Lighthouse) is the component which handles the incoming webhook events from GitHub, and what Jenkins X calls the “ChatOps”: all the interactions between GitHub and the CI/CD platform. Tekton is a pipeline execution engine. It is a cloud-native project built on top of Kubernetes, fully leveraging the API and capabilities of this platform. No single point of failure, no plugins compatibility nightmare – yet.

Since the beginning of 2020, we’ve started an internal project to upgrade our Jenkins X setup – by introducing Prow and Tekton. We saw immediate benefits:

While replacing the pipeline engine of Jenkins X might seem like an implementation detail, in fact, it has a big impact on the developers. Everybody is used to see the Jenkins UI as the CI/CD UI – the main entry point, the way to manually restart pipelines executions, to access logs and test results. Sure, there is a new UI and a real API with an awesome CLI, but the new UI is not finished yet, and some people still prefer to use web browsers and terminals. Leaving the Jenkins Plugins ecosystem is also a difficult decision because some projects heavily rely on a few plugins. And finally, with the introduction of Prow (Lighthouse) the Github workflow is a bit different, with Pull Requests merges being done automatically, instead of people manually merging when all the reviews and automated checks are green.

So if 2019 was the year of Jenkins X at Dailymotion, 2020 will definitely be the year of Tekton: our main release platform – used by almost all our projects except the ad-tech ones – is still powered by Jenkins, and we feel more and more its limitations in a Kubernetes world. This is why we plan to replace all our Jenkins instances with Tekton, which was truly built for Kubernetes and will enable us to scale our Continuous Delivery practices.

Originally posted in the Jenkins X blog by Rob Davies

The Jenkins X project started the beginning of 2019 by celebrating its first birthday on the 14th January, a big event for any open source project, and we have just celebrated our 2nd – hooray!

image by Ashley McNamara, creative commons license

Jenkins X has evolved from a vision of how CI/CD could be reimagined in a cloud native world, to a fast-moving, innovative, rapidly maturing open source project.

Jenkins X was created to help developers ship code fast on Kubernetes. From the start, Jenkins X has focused on improving the developer experience. Using one command line tool, developers can create a Kubernetes cluster, deploy a pipeline, create an app, create a GitHub repository, push the app to the GitHub repository, open a Pull Request, build a container, run that container in Kubernetes, merge to production. To do this, Jenkins X automates the installation and configuration of a whole bunch of best in breed open source tools, and automates the generation of all the pipelines. Jenkins X also automates the promotion of an application through testing, staging, and production environments, enabling lots of feedback on proposed changes. For example, Jenkins X preview environments allow for fast and early feedback as developers can review actual functionality in an automatically provisioned environment. We’ve found that preview environments, created automatically inside the pipelines created by Jenkins X, are very popular with developers, as they can view changes before they are merged to master.

Jenkins X is opinionated, yet easily extensible. Built to enable DevOps best practices, Jenkins X is designed to the deployment of large numbers of distributed microservices in a repeatable and manageable fashion, across multiple teams. Jenkins X facilitates proven best practices like trunk based development and GitOps. To get you up and running quickly, Jenkins X comes with lots of example quickstarts.

In the second half of 2018, Jenkins X embarked on a journey to provide a Serverless Jenkins and run a pipeline engine only when required. That pipeline engine was based on the knative build-pipeline project which evolved into Tekton with much help and love from both the Jenkins and Jenkins X communities. The Jenkins X project completed its initial integration with Tekton in February 2019. Tekton is a powerful and flexible kubernetes-native open source framework for creating CI/CD pipelines, managing artifacts and progressive deployments.

Jenkins X joined the Continuous Delivery Foundation (CDF) as a founding project alongside Jenkins, Spinnaker, and Tekton. Joining a vendor-neutral foundation, focused on Continuous Delivery, made a lot of sense to the Jenkins X community. There had already been a high level of collaboration with the Jenkins and Tekton communities, and there have been some very interesting and fruitful (in terms of ideas) discussions about how to work better with the Spinnaker communities also.

When Jenkins X embarked on its serverless jenkins journey, it chose to use Prow, an event handler for GitHub events and ChatOps. Prow is used by the Kubernetes project for building all of its repos and includes a powerful webhook event handler. Prow is well proven, but heavily tied to GitHub, and not easily extendable to other SCM providers. At the end of June 2019, work commenced on a lightweight, extensible alternative to Prow, called Lighthouse. Lighthouse supports the same plugins as Prow (so you can still ask via ChatOps for cats and dogs) and the same config – making migration between Prow and Lighthouse extremely easy.

We were very busy in June – a frantic burst of activity before summer vacations! One common problem Jenkins X users were facing was the installation of Jenkins X on different Kubernetes clusters. Installing services, ensuring DNS and secrets are correct, and done in the right order is completely different from vendor to vendor, and sometimes cluster to cluster. We realised that to simplify the install, we really needed a pipeline, and whilst this may sound a little like the plot to a film, running a Jenkins X pipeline to install jx really is the best option. The jx boot command interprets the boot pipeline using your local jx binary. The jx boot command can also be used for updating your cluster.

As part of the move to the CDF the Jenkins X project took the opportunity to redesign its logo. An automaton represents the ability of Jenkins X to provide automated CI/CD on Kubernetes and more!

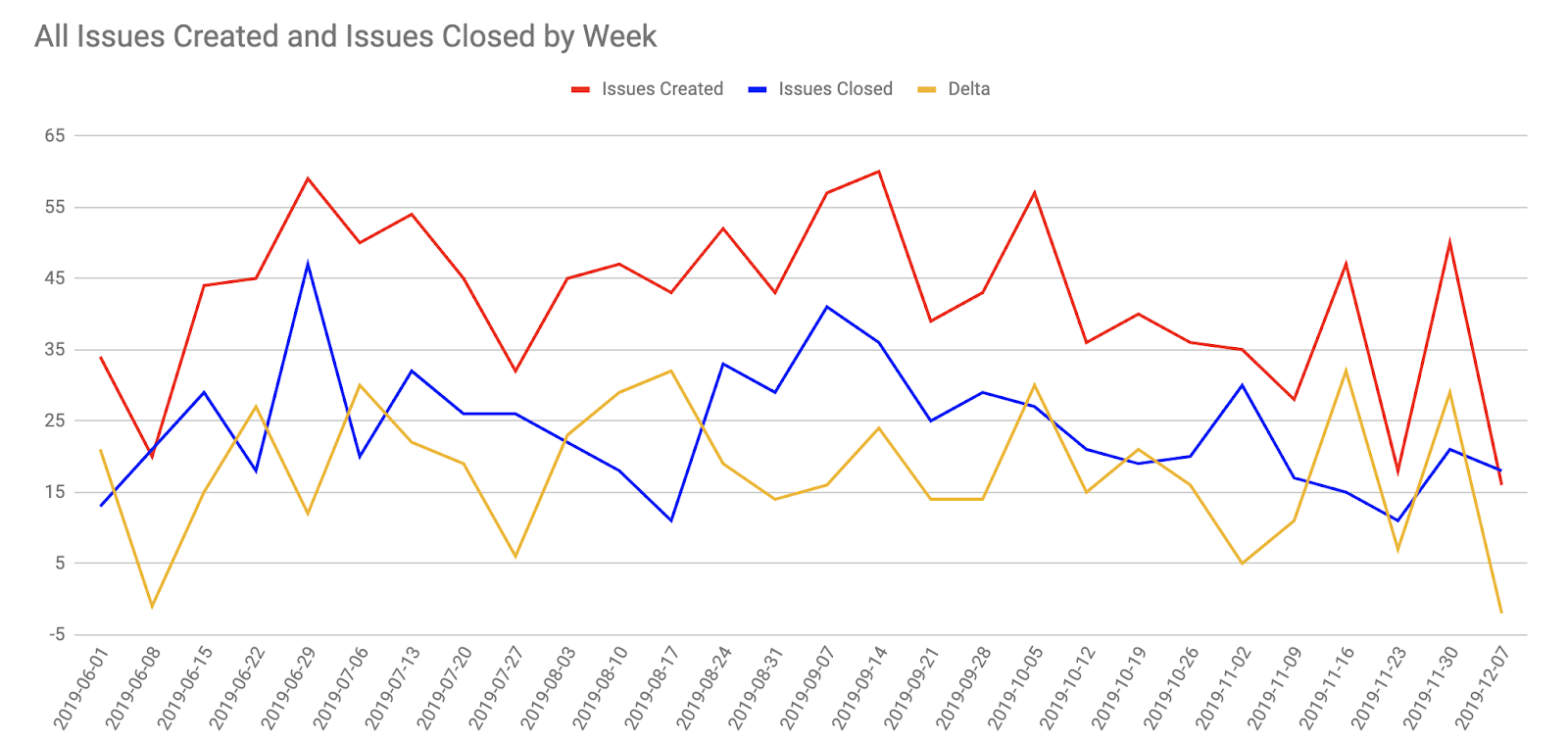

The Jenkins X project has been fast paced with lots of different components and moving parts. This fast pace unfortunately led to some instability and a growth of serious issues that risked undermining all the great work there had been on Jenkins X. There has been a concerted effort by the community to increase stability and reduce outstanding issues – the graph below shows the trend over the last year, with a notable downward trend in the number of issues being created in the last 6 months.

CloudBees also aided this effort by introducing the CloudBees Jenkins X Distribution with increased testing around stabilized configurations and deployments and regular releases every month.

The Jenkins X Bootstrap Steering Committee is tasked with organising the transition to an elected steering committee, as well as determining what responsibilities the steering committee will have in guiding the Jenkins X project.

Neha Gupta is adding support for Kustomize in Jenkins X, to enable Kubernetes native configuration management, while participating in Outreachy from December 2019 to March 2020. We welcome Neha’s work on Jenkins X and look forward to building on our culture of continuous mentoring!

The easiest way to try out Jenkins X is undoubtedly through CloudBees CI/CD powered by Jenkins X which provides Jenkins X through the convenience and ease of use of SaaS. No cluster setup, no Jenkins X install, that is all done for you! Currently, CloudBees CI/CD powered by Jenkins X is available for preview. Sign up here to try out the new Jenkins X Saas!

The Jenkins X project is going to be encouraging the community to get involved with more innovation. There are a lot of great ideas to extend the continuous story with integrated progressive delivery (A/B testing, Canary and Blue/Green deployments) and Continuous Verification, alongside more platforms support. We are expecting lots of awesome new features in the CloudBees UI for Jenkins X too.

Expect lots more exciting new announcements from Jenkins X in 2020!

Author: Dan Lorenc (dlorenc@google.com, twitter.com/lorenc_dan, github.com/dlorenc)

Last year at Kubecon Seattle, Tekton was just an idea in the heads of a few people, and a bit of code inside knative/build-pipeline. Fast forward to today, and we have a thriving community, independent governance inside a great foundation, and we’re quickly approaching our beta release! This year has flown by, so I wanted to highlight some of the original goals of the Tekton project, and some of the milestones we’ve hit toward them.

When Christie, Jason and I started sketching out the original Tekton APIs on a whiteboard in San Francisco, we had a straightforward goal in mind: make the hard parts of building CI/CD systems easy. There are already dozens of CI/CD systems designed to run on kubernetes, and for the most part they all have to build the same basic infrastructure before they can start solving customer problems. Kubernetes makes scheduling, orchestration and infrastructure management easier than anyone could have imagined, but it still leaves users with a few pieces to assemble before they can use it as an application delivery platform.

Things like basic DAG orchestration, artifact management, and even reliable log storage are outside the bounds of the core Kubernetes APIs. Our plan was to use the new Custom Resource Definition feature to try to define a few more “nouns”, on top of the existing Kubernetes primitives, that were better suited for Continuous Delivery workloads. In doing so, we would make it easier for people and trans to create delivery systems designed for their exact use cases, while making sure the underlying primitives allowed for some degree of compatibility.

Vague ideas are great, but it’s much more productive to collaborate with others when you have something concrete to share and get feedback on. So in August of 2018, we released the sketch and principles we used to design it on knative-dev and to a few other interested parties. The feedback was amazing. The core pipeline team on Jenkins at Cloudbees jumped in with some hard earned lessons from their experience working on the most widely used orchestration system in history. The Concourse team at Pivotal helped redesign our extensibility system based on what they learned from the successful Concourse resource model.

Then we got to work building it all out! Our goal must have really resonated with others, because we could really feel the power of open source from day one. Even when there were only a few of us working together at Google, we were part of a much larger effort. We really wouldn’t be here today without the help of our contributors, maintainers and governing committee members.

Around the time we prepared for our first release, it started to become obvious that Tekton (then called knative/build-pipeline) needed its own home. The knative brand and community helped immensely, but Tekton was meant to provide CI/CD for everyone – not just serverless or even kubernetes users. So in February of 2019, we decided to split out the project, name it “Tekton” and donate it to the newly-forming Continuous Delivery Foundation just in time for the 0.1.0 release.

Kim Lewandowski announcing Tekton at the Open Source Leadership Summit

Open source, governance, and communities are hard. The move out of knative and into a new foundation was a big change for the project, but has proven worth the effort! Tekton still works great with other knative components, but has had the chance to grow its own community and evolve to a spot that its users need. Thanks to the community, Tekton has expanded into multiple projects like the Dashboard and CLI, and in Tekton Pipelines we have been so lucky to gain the expertise of folks like Vincent Demeester and Andrea Frittoli.

The rest of this year has felt like a blur, but I wanted to call out some major milestones we hit:

We’re rapidly approaching the first Tekton beta release! As part of this effort, we evaluated our API surface and identified quite a few areas that need hardening. This includes finishing up the table-stakes requirements for a CI/CD platform – things like triggers, metadata storage and building up our catalog. The Triggers v0.1.0 release has made Tekton usable in so many new ways, and we’re just getting started there still!

Scott Seaward has has just started work on refactoring PipelineResources into an extensible system that will form the basis for the Tekton catalog, and Jason Hall is working on a metadata storage system that will help power some new ideas around software supply chain management.

If you’d like to get involved in the Tekton project, you can find us on Github, Slack or our email list . We’ll also be at Kubecon next week! Come attend one of the many sessions or the CD Summit. Here’s to Tekton in 2020!