CDF Newsletter – May 2020 Article

Subscribe to the Newsletter

Tekton is a project that evolved from an internal Google tool that used Knative to build and deploy software. In 2018, it was spun out as an independent project and donated to the Continuous Delivery Foundation.

The core component, Tekton Pipelines, runs as a controller in a Kubernetes cluster. It registers several custom resource definitions which represent the basic Tekton objects with the Kubernetes API server, so the cluster knows to delegate requests containing those objects to Tekton. These primitives are fundamental to the way Tekton works. Tekton’s building block approach starts with the smallest atom of work, the Step, aggregates Steps together in Tasks, and aggregates Tasks together in Pipelines.

If the nomenclature here feels confusing, don’t feel bad — it is complicated! Each tool in the space uses slightly different terms; this is something we’re working on standardizing in the CDF Interoperability SIG. We’d love your input – here’s how to participate! Tekton’s usage of these terms is clarified in the sig-interop Vocabulary definitions doc:

* *Step*: a specific function to perform.

* *Task*: is a collection of sequential steps you would want to run as part of your continuous integration flow. A task will run inside a pod on your cluster.

* *ClusterTask*: Similar to Task, but with a cluster scope.

* *Pipeline*: stateless, reusable, parameterized collection of tasks. Tasks are linked together in a Pipeline, which describes the end-to-end deployment for an application.

* *PipelineRun*: an instantiation of a Pipeline definition, filling in the Pipeline’s parameters with concrete values

* *Pipeline Resource*: objects that will be input to or output from tasks

* *Trigger*: Triggers is a Kubernetes Custom Resource Definition (CRD) controller that allows you to extract information from event payloads (a “trigger”) to create Kubernetes resources.

Notable omissions from the CRD list are “Steps”, which don’t have their own CRD because they’re the smallest unit of execution which are always contained inside a Task. The Conditions and Dashboard Extension CRDs are still optional and experimental — but very exciting!

Tekton’s approach is particularly interesting from a tool interoperability standpoint. By focusing on these building blocks and the concrete representation of them as declarative configuration, Tekton creates a standard platform for CD in the same way that Kubernetes provides a platform for application runtimes. This allows user-facing tools to build on the platform rather than reinventing these primitives. Several projects have already taken up this approach:

* Jenkins X uses Tekton as its execution engine. It’s been an option for a while now, but recently the project announced it was moving to using Tekton exclusively. Jenkins X provides pipeline definitions and gitops workflows that are tailored for cloud-native CD.

* Kabanero is a project that enables teams to develop and deploy applications on Kubernetes, so architects can provide pre-approved application stacks for developers to work from. It uses Tekton Pipelines and several associated projects like Tekton Dashboard and Triggers; indeed the developers building the Dashboard are largely working on Kabanero and the IBM Cloud Devops Pipeline product.

* Relay by Puppet is a hosted service that uses Tekton as the execution engine for event-triggered devops and deployment workflows. (Full disclosure, this is the product I am working on!) It provides a YAML dialect for building workflows that can be triggered by external events, via API, or manually, to automate tasks that need to stitch together different tools and services.

* TriggerMesh have integrated Tekton Pipelines into their TriggerMesh Cloud project and are working on a tool called Aktion to translate Github Actions into Tekton Pipelines.

* There are more, too! Check out the Tekton Friends repo for a longer list of projects and end users building on Tekton.

As exciting as this activity is, I think it’s important to note there’s still a lot of work to be done. There’s a distinct difference between two projects both using Tekton as a common upstream platform and achieving interoperability between them! It’s a big problem and it’s easy to get overwhelmed with the magnitude of the whole thing. One of my earliest lessons when I moved from SRE into product management was: focus first on solving the pain points which end users feel most acutely. That can be some combination of pervasiveness (what percent of the overall user base feels it?) and severity (how bad is each individual incident?) – ideally, fix the thing which is worst on both axes! From an end user’s standpoint, CD tools have a pretty steep learning curve with a bunch of pitfalls. A sampling of these severe-and-pervasive pitfalls I’ve heard from our users as we’ve been building Relay:

* How do I wrap my head around the terminology and technology so I can get started?

* How do I integrate the parts of the build/test/deploy toolchain my organization needs to continue using?

* How do I operate (upgrade, monitor, troubleshoot) the tool once it’s up and running?

Interoperability isn’t a cure-all, but there are definitely areas where it could work like a soothing balm on all of this pain. Industry-standard terminology or at a minimum, an authoritative Rosetta Stone for CD, could help. At the moment, there’s still pockets of debate on whether the “D” stands for Deployment or Delivery! (It’s “Delivery”, folks – when you mean “Deployment” you have to spell it out.)

Going deeper, it’d be hugely helpful help users integrate the tools they’re already using into a new framework. A wide ecosystem of steps that could be used by any of the containerized CD tools – not just those based on Tekton but, for example, Spinnaker and Keptn as well – would have a number of benefits. For end users, it would increase the amount of content available “out of the box”, meaning they would have less work to integrate the tools and services they need. Ideally, no end-user should have to create a step from scratch because there’s a vast, easily discoverable library of things that accomplish the job they have. There’s also a benefit to maintainers of services and tools that end-users want, like Kaniko, Gradle, and the cloud services, who have to build an integration with each execution framework themselves or rely on the community to do it. Building and maintaining one reusable implementation would reduce the maintenance burden and allow them to provide higher quality.

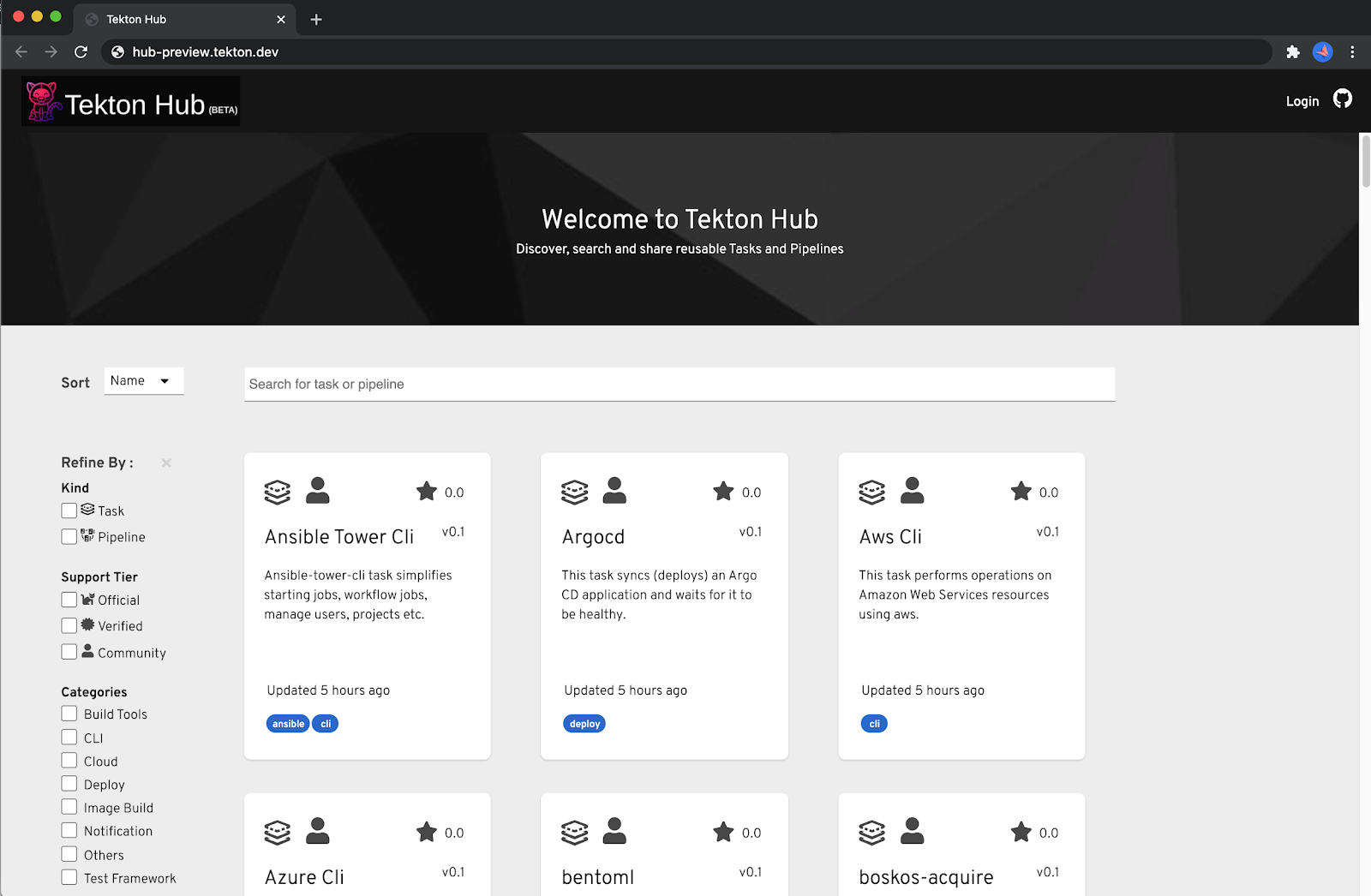

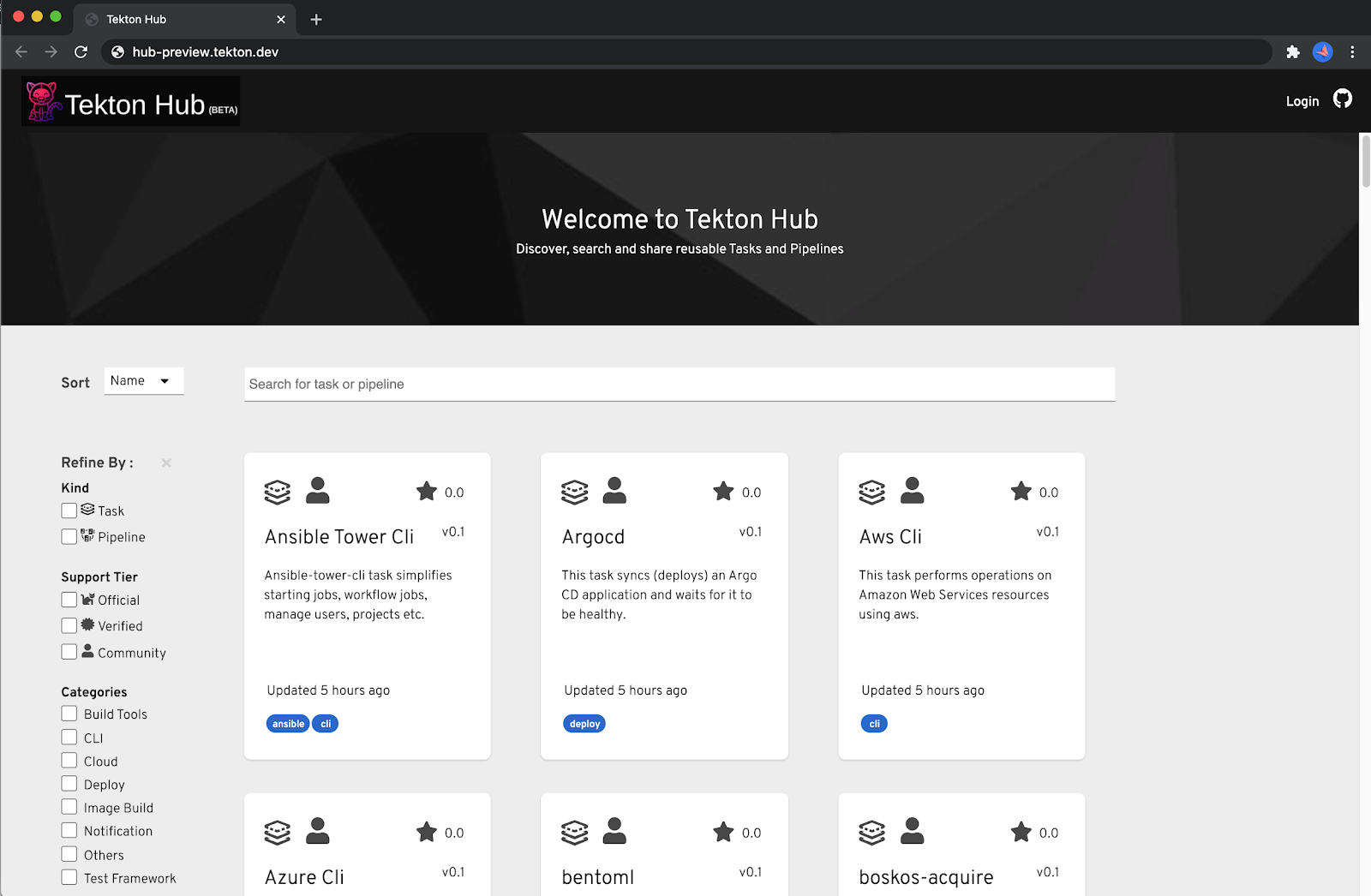

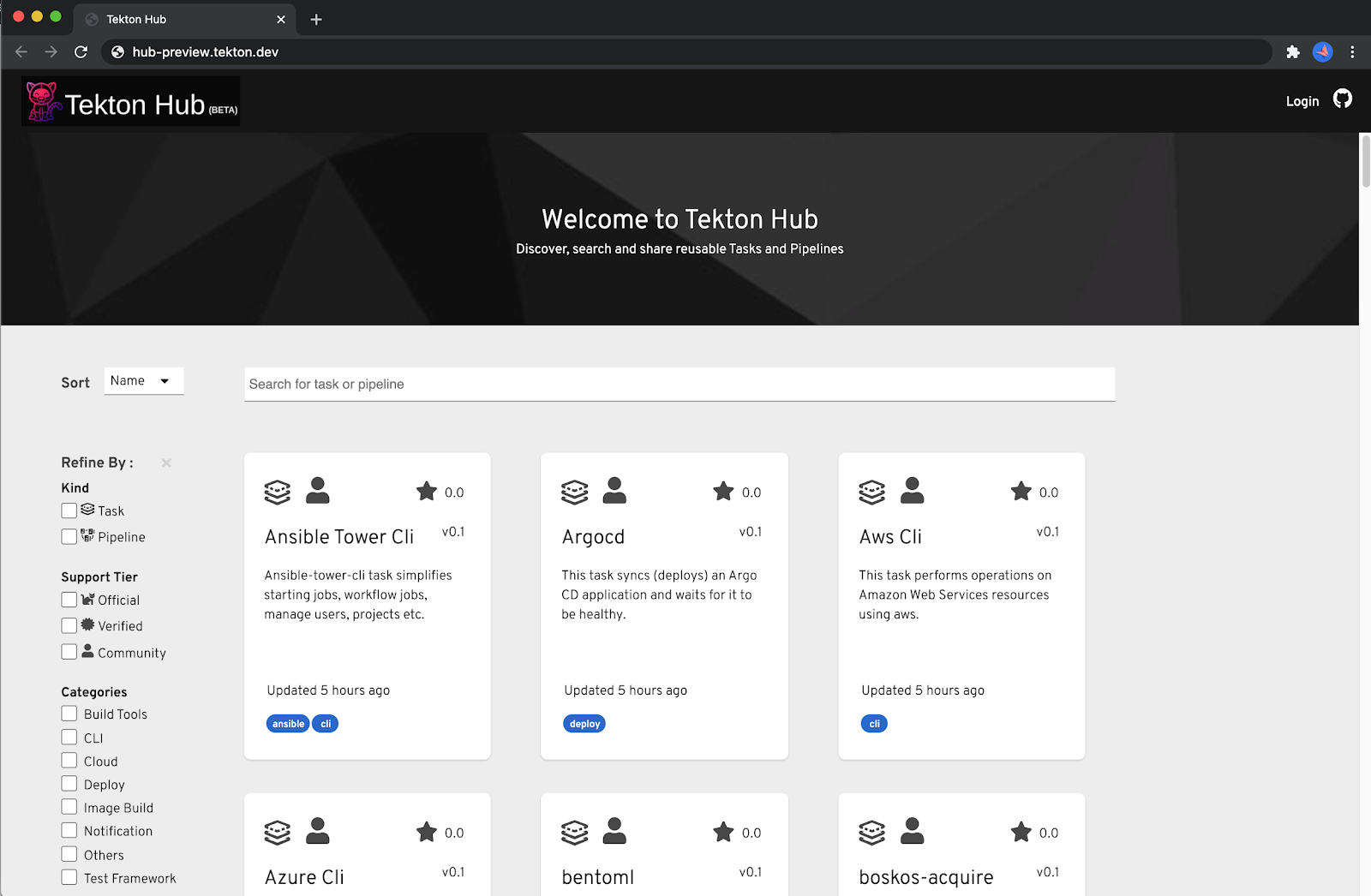

To put on my Tekton advocate hat for a moment, its well-defined container contract makes it easy to use general-purpose containers in your pipeline. If you want to take advantage of more specialized features the framework provides, the Tekton Catalog has a number of high-quality examples to build from. There are improvements on the way to aid the discoverability and reuse parts of the problem, such as the exciting new Tekton Hub donated by Red Hat.

The operability concerns are a real problem for CD pipeline tools, too. Although CD is usually associated with development, in many organizations the tool itself is considered a production service, because if there are problems committing, building, testing, and shipping code, the engineering organization isn’t delivering value. Troubleshooting byzantine failures in complex CI/CD pipelines is a specialized discipline requiring skills that span Quality Engineering, SRE, and Development. The more resilient the CD tools are architected, and the more standard their interfaces for reporting availability and performance metrics, the easier that troubleshooting becomes.

Again, to address these from Tekton’s perspective, a huge benefit of running on Kubernetes is that the Tekton services that run in the cluster can take advantage of all the powerful k8s operability features. So fundamental capabilities that are highly valuable to operators and troubleshooters like log aggregation, in-place upgrades, error reporting, and scale-out all ride on top of the Kubernetes infrastructure. It’s not “for free” of course; nothing in distributed systems is ever truly “for free” and if anyone tries to tell you otherwise, the thing they’re selling you is probably *very* expensive. But it does mean that general-purpose Kubernetes skills and tooling goes a long way towards operating Tekton at scale, rather than having to relearn or reimplement them at the application layer.

In conclusion, I’m excited that the interoperability conversation is well underway at the CDF. There’s a long way to go, but the amount of activity and progress in the space is very encouraging. If you’re interested in pitching in to discuss and solve these kinds of problems, please feel free to join in #sig-interoperability channel on the CDF slack or check out the contribution information.

You may have heard that Tekton Pipelines is now beta! That’s not beta like the video format but beta like Kubernetes! Okay I’ll stop trying to make jokes, because compatibility is no laughing matter for folks who want to build on top of and use Tekton, and that’s why we’ve declared beta, so that you can feel more confident in using it.

So what does beta mean exactly? It means for Tekton what it means for Kubernetes, and it boils down to two things:

You might be wondering what “the API” means in this context – good question! It’s the specifications of the CRDs themselves and runtime details like the special directories that Tekton makes.

Not all of Tekton is beta however! Right now it’s just Tekton Pipelines and it’s only the following CRDs:

This means that other types that you might like, such as Conditions and PipelineResources (see the next section!) are still alpha and don’t (yet!) have the same beta level guarantees.

You can always refer to our API compatibility docs in our repo if you forget!

What about them indeed! If you are part of the Tekton community, you’ll know that we keep going back and forth on our love/hate-able PipelineResources – the feature you love until it doesn’t work.

A few months ago, our “difficult to understand, hard to debug” friend was challenged by the community: what would the Tekton world look like without PipelineResources? And when we went on that journey, we discovered features which PipelineResources gave us which were super useful on their own:

So we focused on adding those features and brought them to beta. In the meantime, we keep asking the question: do we still need PipelineResources? And what would they look like if redesigned with workspaces and results? We’re still asking those questions and that’s why PipelineResources aren’t beta (yet)!

We know some users really love them: “There are dozens of us,” – @dlorenc. So we haven’t given up on them yet, and there are some things that you just still can’t do well without them: for example, how do you consistently represent artifacts such as images moving through Pipelines? You can’t! So the investigation continues.

In the meantime, we’ve made Task equivalents of some of our PipelineResources in the Tekton catalog, such as PullRequests, GCS, and git.

Hooray! Our shiny new site is live! Right this way -> https://tekton.dev/

Tekton Documentation is now hosted on the website at https://tekton.dev/docs/. And interactive tutorials are hosted at https://tekton.dev/try/. There is just one interactive tutorial hosted right now but more are in process to get published, so watch this space!

We’re hard at work on more nifty Tekton stuff to make your CI/CD Pipelines more powerful and more portable by achieving Tekton’s mission:

Be the industry-standard, cloud-native CI/CD platform components and ecosystem.

Check out more on our mission and our 2020 roadmap in our community repo.

Thanks to all of the many amazing contributors who have gotten us to this point! The list below is people credited in Tekton Pipelines release notes, but for the complete list of everyone contributing to Tekton check out our devstats!

Originally posted to the IBM blog by Jerh O’Connor

With this new type of Continuous Delivery Pipeline, you define your pipeline as code using Tekton resource YAML stored in a Git repository. This lets you version and share your pipeline definitions while allowing you to configure, run, and view pipeline output in the familiar IBM Cloud Continuous Delivery DevOps experience.

Tekton Pipelines is an open source project, born out of Knative Build, that you can use to configure and run continuous integration/continuous delivery (CI/CD) pipelines within a Kubernetes cluster.

Tekton Pipelines are cloud native and run on Kubernetes using custom resource definitions specialized for executing CI/CD workflows. The Tekton Pipelines project is new and evolving and has support and active commitment from leading technology companies, including IBM, Red Hat, Google, and CloudBees.

For a closer look at Tekton, see our video “What is Tekton?”:06:49

What is Tekton?

In addition to the benefits of Tekton, this new capability in IBM Continuous Delivery provides the following unique features:

Getting started with CD Tekton-enabled pipelines requires a little setup. You will need the following:

Once you have these pieces in place, you need to configure the Tekton Pipeline to enable the running of your pipeline.

Click on the Tekton Pipeline tile on your toolchain dashboard to be taken to the configuration screen, where you will see notifications on what remains to be configured to use this new pipeline technology:

Select the required values in the Definitions tab, select your private worker in the Worker tab, and click Save.

You now need to set some trigger mappings via the Triggers tab so you can run the pipeline.

The simplest trigger is a manual trigger where you kick off a run yourself from the dashboard. You can also create Git triggers that fire based on git push and pull events as needed.

CD Tekton-enabled pipelines also provide a means of externalising reusable properties and provide a simple, secure method for storing sensitive information like apikeys via the Environment Properties tab.

Once the setup has been completed, the new Tekton Dashboard automatically updates to show information on in-flight and completed Pipeline Runs, showing the status of these runs and allowing the cancellation and deletion of runs.

In all cases, you can dig deeper into the logs and see the output of each Tekton Task and Step in the selected Pipeline run.

Caution: The Tekton Pipelines project is still in alpha and being actively developed. It is very likely that you will need to make changes to your pipeline definitions as the project continues to evolve

Watch this short companion video for a demo of using our sample Tekton pipeline in IBM Cloud.

Now you can try it out yourself. For more information, see our documentation. If you have questions, engage our team via Slack by registering here and join the discussion in the #ask-your-question channel on our public Cloud DevOps @ IBM Slack.

We’d love to hear your feedback.

Learn more about Tekton by reading “Tekton: A Modern Approach to Continuous Delivery.”

Tekton Pipelines has shifted into beta, meaning the open source CD project is now looking for more contributors and testers.

Tekton Pipeline is the core component of the Tekton project, which is overseen by the Continuous Delivery Foundation, and is pitched to “configure and run continuous integration/continuous delivery (CI/CD) pipelines within a Kubernetes cluster.” It originated in Knative Build.

The project team said the beta means “most Tekton Pipelines CRDs (Custom Resource Definition) are now at beta level. This means overall beta level stability can be relied on.” However, other components, including Tekton Triggers, Dashboard, Pipelines CLI and more, “are still alpha and may continue to evolve from release to release”.

The team overseeing the development of the open source Tekton Pipelines under the auspices of the Continuous Delivery (CD) Foundation announced today the project is now in beta.

Christie Wilson, Tekton Project Lead and a software engineer at Google, said Tekton Pipelines are not necessarily a tool most DevOps teams will interact with directly. Rather they provide a foundation on which DevOps platforms can be built that will make it easier for DevOps teams to construct workflows spanning multiple continuous integration/continuous delivery (CI/CD) platforms.

As such, Tekton Pipelines should play a critical role in not just fostering interoperability but also alleviating concerns about become locked into a specific CI/CD platform.

Tekton Pipelines, the major component in an open-source project for CI/CD (continuous integration and continuous delivery) on Kubernetes, has reached the milestone of beta status.

Tekton was originally Knative Build, what was then one of three major components in the Knative project, the others being serving and eventing. In June 2019, Knative Build was deprecated in favour of Tekton Pipelines. A Tekton pipeline runs tasks, where each task consists of steps running on a container in a Kubernetes pod.

Tekton Pipelines, the core component of the Tekton project, is moving to beta status with the release of v0.11.0 this week. Tekton is an open source project creating a cloud-native framework you can use to configure and run continuous integration and continuous delivery (CI/CD) pipelines within a Kubernetes cluster.

Tekton development began as Knative Build before becoming a founding project of the CD Foundation under the Linux Foundation last year.

The Tekton project follows the Kubernetes deprecation policies. With Tekton Pipelines upgrading to beta, most Tekton Pipelines CRDs (Custom Resource Definition) are now at beta level. This means overall beta level stability can be relied on. Please note, Tekton Triggers, Tekton Dashboard, Tekton Pipelines CLI and other components are still alpha and may continue to evolve from release to release.

Tekton encourages all Tekton projects and users to migrate their integrations to the new apiVersion. Users of Tekton can see the migration guide on how to migrate from v1alpha1 to v1beta1.

Tekton is in its second year of development and is currently being used by both free and commercial offerings by multiple companies.

Now is a great time to contribute. There are many areas where you can jump in. For example, the Tekton Task Catalog allows you to share and reuse the components that make up your Pipeline. You can set a Cluster scope, and make tasks available to all users in a namespace, or you can set a Namespace scope, and make it usable only within a specific namespace.

From Dailymotion, a French video-sharing technology platform with over 300 million unique monthly users

At Dailymotion, we are hosting and delivering premium video content to users all around the world. We are building a large variety of software to power this service, from our player or website to our GraphQL API or ad-tech platform. Continuous Delivery is a central practice in our organization, allowing us to push new features quickly and in an iterative way.

We are early adopters of Kubernetes: we built our own hybrid platform, hosted both on-premise and on the cloud. And we heavily rely on Jenkins to power our “release platform”, which is responsible for building, testing, packaging and deploying all our software. Because we have hundreds of repositories, we are using Jenkins Shared Libraries to keep our pipeline files as small as possible. It is an important feature for us, ensuring both a low maintenance cost and a homogeneous experience for all developers – even though they are working on projects using different technology stacks. We even built Gazr, a convention for writing Makefiles with standard targets, which is the foundation for our Jenkins Pipelines.

In 2018, we migrated our ad-tech product to Kubernetes and took the opportunity to set up a Jenkins instance in our new cluster – or better yet move to a “cloud-native” alternative. Jenkins X was released just a few months before, and it seemed like a perfect match for us:

While adopting Jenkins X we discovered that it is first a set of good practices derived from the best performing teams, and then a set of tools to implement these practices. If you try to adopt the tools without understanding the practices, you risk fighting against the tool because it won’t fit your practices. So you should start with the practices. Jenkins X is built on top of the practices described in the Accelerate book, such as micro-services and loosely-coupled architecture, trunk-based development, feature flags, backward compatibility, continuous integration, frequent and automated releases, continuous delivery, Gitops, … Understanding these practices and their benefits is the first step. After that, you will see the limitations of your current workflow and tools. This is when you can introduce Jenkins X, its workflow and set of tools.

We’ve been using Jenkins X since the beginning of 2019 to handle all the build and delivery of our ad-tech platform, with great benefits. The main one being the improved velocity: we used to release and deploy every two weeks, at the end of each sprint. Following the adoption of Jenkins X and its set of practices, we’re now releasing between 10 and 15 times per day and deploying to production between 5 and 10 times per day. According to the State of DevOps Report for 2019, our ad-tech team jumped from the medium performers’ group to somewhere between the high and elite performers’ groups.

But these benefits did not come for free. Adopting Jenkins X early meant that we had to customize it to bypass its initial limitations, such as the ability to deploy to multiple clusters. We’ve detailed our work in a recent blog post, and we received the “Most Innovative Jenkins X Implementation” Jenkins Community Award in 2019 for it. It’s important to note that most of the issues we found have been fixed or are being fixed. The Jenkins X team has been listening to the community feedback and is really focused on improving their product. The new Jenkins X Labs is a good example.

As our usage of Jenkins X grows, we’re hitting more and more the limits of the single Jenkins instance deployed as part of Jenkins X. In a platform where every component has been developed with a cloud-native mindset, Jenkins is the only one that has been forced into an environment for which it was not built. It is still a single point of failure, with a much higher maintenance cost than the other components – mainly due to the various plugins.

In 2019, the Jenkins X team started to replace Jenkins with a combination of Prow and Tekton. Prow (or Lighthouse) is the component which handles the incoming webhook events from GitHub, and what Jenkins X calls the “ChatOps”: all the interactions between GitHub and the CI/CD platform. Tekton is a pipeline execution engine. It is a cloud-native project built on top of Kubernetes, fully leveraging the API and capabilities of this platform. No single point of failure, no plugins compatibility nightmare – yet.

Since the beginning of 2020, we’ve started an internal project to upgrade our Jenkins X setup – by introducing Prow and Tekton. We saw immediate benefits:

While replacing the pipeline engine of Jenkins X might seem like an implementation detail, in fact, it has a big impact on the developers. Everybody is used to see the Jenkins UI as the CI/CD UI – the main entry point, the way to manually restart pipelines executions, to access logs and test results. Sure, there is a new UI and a real API with an awesome CLI, but the new UI is not finished yet, and some people still prefer to use web browsers and terminals. Leaving the Jenkins Plugins ecosystem is also a difficult decision because some projects heavily rely on a few plugins. And finally, with the introduction of Prow (Lighthouse) the Github workflow is a bit different, with Pull Requests merges being done automatically, instead of people manually merging when all the reviews and automated checks are green.

So if 2019 was the year of Jenkins X at Dailymotion, 2020 will definitely be the year of Tekton: our main release platform – used by almost all our projects except the ad-tech ones – is still powered by Jenkins, and we feel more and more its limitations in a Kubernetes world. This is why we plan to replace all our Jenkins instances with Tekton, which was truly built for Kubernetes and will enable us to scale our Continuous Delivery practices.

This blog post has been written by the owners of the different projects, and in particular, huge thanks to Christie Wilson, Andrea Frittoli, Adam Roberts and Vincent Demeester!

At the end of last year Dan wrote the blog post: A Year of Tekton. It was a great retrospective on what happened since the bootstrap of the project; a highly recommended read! Now that we’re getting into the swing of 2020, let’s reflect again back on 2019 and look forward to what we can expect for Tekton this year!

We can safely say 2019 (more or less the project’s first year!) was a great year for Tekton. Just like a toddler we tried things, sometimes failed and learned a lot; we are growing fast!

The year 2019 saw 9 releases of Tekton Pipelines, from the first one (0.1.0) to the latest (0.9.2). We shared the work of creating the releases as much as possible, though many contributors are behind the work in each!!

If you are curious about the naming of the release starting from 0.3.x, we decide to spice things up a bit and name our release with a composition of a cat breed and a robot name (in reference to our amazing logo, a robot cat).

Aside from the initial project (tektoncd/pipeline), we bootstrapped a bunch of new projects:

We’ve come a long way but we’ve got more to do! Though we can’t predict what will happen for sure, here is a preview of what we’d like to make happen in 2020!

As you’ve seen, we’ve made a lot of changes! Going forward we want to make sure folks who are using and building on top of Tekton can have more stability guarantees. With that in mind, we are pushing for Tekton Pipelines to have a beta release early in 2020. If you are interested in following along with your progress, please join the beta working group! Or keep an eye on our Twitter for the big announcement.

Once we’ve announced beta, users should be able to expect increased stability as we’ll be taking our lead from kubernetes and mirroring their deprecation policy, for example any breaking changes will need to be rolled out across 9 months or 3 releases (whichever is longer).

And once we get to beta, why stop there? We’d love to be able to offer users GA stability as soon as we possibly can. After we get to beta, we’ll be looking to progress the types that we didn’t promote to beta (e.g. Conditions), add any important features we don’t yet have (we’re looking at you on failure handling and “pause and resume” aka “the feature that enables manual approval”!), and then we should be ready to announce GA!

Speaking of types that won’t be going beta right away: PipelineResources! PipelineResources are a type in Tekton that is meant to encapsulate and type data as it moves through your Pipelines, e.g. an image you are building and deploying, or a git commit you’re checking out and building from.

This concept was introduced early on in Tekton and bares a close resemblance to Concourse resources. However as we started trying to add more features to them, we started discovering some interesting edges to the way we had implemented them that caused us to step back and give them a re-think. Plus, some folks in our community asked the classic question “why PipelineResources” and we found our answer wasn’t as clear as we’d like!

As we started down the path of re-designing, and re-re-designing again, we started to get some clarity on what exactly it was we were trying to create: the interface between Tasks in a Pipeline! And thanks to a revolutionary request to improve our support for volumes, we finally feel we are on the right path! The next steps along this path are to add a few key features, namely the concept of workspaces (i.e. files a Task operates on) and allow Tasks to output values (aka “results”).

Once we have these in place we’ll revisit our designs and our re-designs.

Hand in hand with our beta plans, we’re revamping our website! Soon at tekton.dev you’ll be able to find introductory material, tutorials, and versioned docs.

Besides making it easy for folks to implement cloud native CI/CD, one of the most important goals of Tekton is for folks to be able to share and reuse the components that make up your Pipeline. For example, say you want to update Slack with the results of a Task – wouldn’t it be great if there were one battle tested way to do that, with a clean interface?

That’s what the Tekton catalog is all about! To date we’ve received more than 20 Tasks from the community to do everything from running Argo CD to testing your configuration with conftest.

But there’s so much more we want to do! We want to offer versioning and test guarantees that can make it painless for folks to depend on Tasks in the Catalog – and for companies to create Catalogs of their own.

Plus, the Catalog is a great place for us to build better interoperability even between the Tekton projects, for example with the Task that runs tkn (the Tekton CLI).

A community is nothing without its users, contributors, adopters and friends, so we want to explicitly shout outs to our community for their tremendous effort and support in 2019 and hopefully even more in 2020.

We’ve gained friends and more are always welcome! Our current list of “known friends” includes:

We welcome friend requests! Please submit a PR to https://github.com/tektoncd/friends, this repository acts as a place that allows members of the ecosystem (known as “Tekton Friends”) to self-report in a way that is beneficial to everyone. We’d love to have you as a friend if your company is using Tekton and/or contributing to it

Adoption of Tekton has grown and became a part of both free and commercial offerings by various companies, demonstrating that Tekton’s valuable and ready for anything

In mid-2019, Puppet launched a new cloud-native CD service called Project Nebula that’s built on Tekton Pipelines. It provides a friendly YAML workflow syntax and niceties like secrets management and a spiffy GUI on top of Tekton instance running in GCP. To coincide with the public beta of Nebula, Scott Seaward keynoted at the Puppetize PDX user conference to talk about how Tekton works under the hood. Since then, the Nebula team has contributed several PRs to the Pipelines repo and are looking forward to working on step interoperability, triggers, and other awesome upstream features in 2020.

Other notable examples include:

It has been such a privilege to see more and more people get excited about Tekton and share it with the world! Here are some (but not all!!) of the great talks and tweets we saw about Tekton in 2020, not to mention our Tekton contributor summit!

Huge shoutouts to all of the tektoncd projects contributors

If you are even more curious on the contributions happening in the tektoncd project, you can visit the tekton.devstats.cd.foundation site (e.g. a page showing the overall contributors on all tektoncd projects).

If you are interested in contributing to Tekton, we’d love to have you join us! Every tektoncd project has a CONTRIBUTING.md that can point you in the right direction, and our community contains helpful links and guidelines. Feel free to open issues, join slack, or pop into one of our working groups! Hope to see you soon