The Continuous Delivery Foundation (CDF) Technical Oversight Committee (TOC) approved the formation of the Special Interest Group (SIG) Interoperability on January 14, 2020. SIG Interoperability aims to increase integration and interoperability across different tools and technologies in the open source CI/CD ecosystem. One of the prerequisites to achieve this is to provide a neutral forum, enabling dialog between projects and end-users so they can come together and discuss their use cases, needs, and challenges. This will allow projects and communities to explore additional collaboration opportunities and increase the visibility of ongoing work.

One of the means the SIG adopted to provide a forum for discussion is to invite representatives of project and end-user communities to regular SIG meetings so they can present what they are doing. The presentations are followed by open discussions, which allow community members to ask questions, raise concerns, and, more importantly, start talking with each other. However, one thing the community noticed is the lack of shared terminology and vocabulary: tools and technologies employ different terms to describe what is often the same thing.

This is not a surprising finding. There are many ways to greet someone, and when we as humans do not understand the word being used, we can observe body language, process tone, and even touch. These natural inputs allow us to establish a shared vocabulary, upon which we have built successful ways of living and social norms of interacting.

Unfortunately, this process is not so easy for machines. We humans have to decide which norms to establish for them, and when we talk about machine interactions those norms usually surface as protocols, best practices, or requirements.

Continuous Integration (CI) and Continuous Delivery (CD) practitioners have many tools at their disposal, but it is often the case that what we call a pipeline in today’s tool of choice is not called the same thing in the tool we use tomorrow. Within our own sphere of influence and interaction we can adjust for these nuances, but machines talking to one another do not necessarily have that luxury.

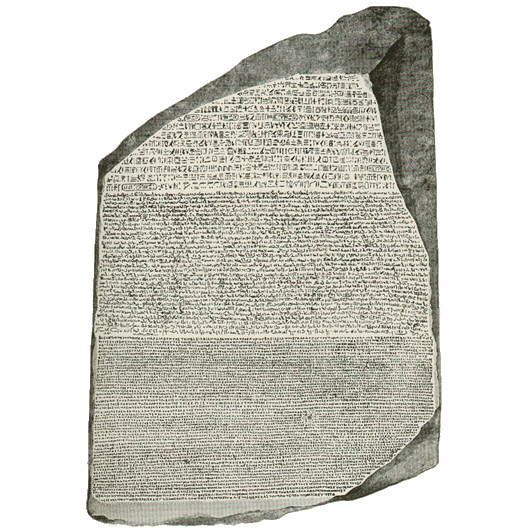

These thoughts led contributors to the SIG to work on vocabulary and terminology as the first thing after the SIG was approved. We believe that if we can establish a shared vocabulary across the industry in the CI/CD domain, we can remove the barriers between humans and then start tackling how to get machines to talk to each other. The work is envisioned as follows: collect the existing terms used by CI/CD tools and technologies in a document, and create a mapping of the terms across projects, essentially making a Rosetta Stone for the CI/CD domain. We think that we can continue this work and look for possibilities to come up with shared vocabulary in a collaborative manner.

The document the SIG is working on is available in the SIG Interoperability repository on GitHub, and it currently contains terms for 10 CI/CD projects, as shown in the table below.

| Project | | | | | | |
| --- | --- | --- | --- | --- | --- | --- |
| CircleCI | N/A | Step | Job | Workflow | Trigger | Executor |
| GitHub Actions | Action | Step | Job | Workflow | Event | Runner |
| GitLab CI/CD | N/A | Job | Stage | Pipeline | Trigger | Runner |
| Jenkins | N/A | Job | Stage | Pipeline | Trigger | Agent/Node |
| Jenkins X | N/A | Step | Stage | Pipeline | Trigger | Agent |
| Keptn | N/A | N/A | Task | Workflow | Event | Keptn-Service |
| Screwdriver | N/A | Step | Job | Pipeline | Trigger | N/A |
| Spinnaker | N/A | Task | Stage | Pipeline | Trigger | Cluster |
| Tekton | N/A | Step | Task | Pipeline | Trigger | Resource |
| Zuul | N/A | N/A | Job | Pipeline | Trigger | Node |
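To illustrate how such a mapping could be consumed by a machine, here is a minimal Python sketch. It assumes that terms sharing a column in the table are rough equivalents; the columns are kept positional because their headings are not reproduced here, and the `translate` helper is hypothetical rather than part of the SIG's document.

```python
# Rows copied from the table; each list holds one project's terms by column.
TERMS = {
    "CircleCI":       ["N/A", "Step", "Job", "Workflow", "Trigger", "Executor"],
    "GitHub Actions": ["Action", "Step", "Job", "Workflow", "Event", "Runner"],
    "GitLab CI/CD":   ["N/A", "Job", "Stage", "Pipeline", "Trigger", "Runner"],
    "Jenkins":        ["N/A", "Job", "Stage", "Pipeline", "Trigger", "Agent/Node"],
    "Jenkins X":      ["N/A", "Step", "Stage", "Pipeline", "Trigger", "Agent"],
    "Keptn":          ["N/A", "N/A", "Task", "Workflow", "Event", "Keptn-Service"],
    "Screwdriver":    ["N/A", "Step", "Job", "Pipeline", "Trigger", "N/A"],
    "Spinnaker":      ["N/A", "Task", "Stage", "Pipeline", "Trigger", "Cluster"],
    "Tekton":         ["N/A", "Step", "Task", "Pipeline", "Trigger", "Resource"],
    "Zuul":           ["N/A", "N/A", "Job", "Pipeline", "Trigger", "Node"],
}


def translate(term, source, target):
    """Return the target project's term in the same column, or None."""
    row = TERMS[source]
    if term == "N/A" or term not in row:
        return None
    other = TERMS[target][row.index(term)]
    return None if other == "N/A" else other


# The same word can mean different things in different tools:
gitlab_job_in_tekton = translate("Job", "GitLab CI/CD", "Tekton")
circleci_job_in_tekton = translate("Job", "CircleCI", "Tekton")
```

Under this positional assumption, a GitLab CI/CD "Job" and a CircleCI "Job" land on different Tekton terms, which is exactly the kind of ambiguity the shared vocabulary is meant to surface.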

When organizations establish CI/CD pipelines, they employ not just CI/CD tools but also Software Configuration Management (SCM) systems, Artifact Repository Managers (ARM), and so on. That’s why we included terminology for SCM tools such as Gerrit, GitHub, and GitLab, and we expect to collect terms used by other tools in adjacent areas as well.

It is important to highlight that we consider this work as still ongoing, and we encourage and welcome everyone to add terminology used by the projects they use or are involved in to the document so we have broader coverage of the tools and technologies. If you notice things that can be improved, feel free to send a pull request to the CDF SIG Interoperability repository and improve the existing documentation.

CDF Newsletter – May 2020 Article


Jenkins X is an automated CI/CD platform built on Kubernetes. Jenkins X enables users to harness the power of Kubernetes without needing to be Kubernetes experts. How does a CI/CD platform do this? Jenkins X forms an abstraction layer over Kubernetes, simplifying the developer experience of building, deploying, and running Kubernetes applications. Under the hood, Jenkins X combines best-of-breed open source tools, creating a Kubernetes-native CI/CD platform that facilitates developer and GitOps best practices.

In this post, we’ll look at how Jenkins X uses Kubernetes Custom Resource Definitions (CRDs) and the Kubernetes API to bring together these best-of-breed open source projects, creating a cutting edge continuous delivery platform on Kubernetes. We’ll highlight two Kubernetes design principles that help us understand how Jenkins X natively extends Kubernetes:

Kubernetes itself is decomposed into multiple components which interact through the Kubernetes API. Kubernetes’ declarative, API driven infrastructure enables it to be composable and extensible.

The Kubernetes API is declarative rather than imperative: as a user, you declare the desired state of your application and the Kubernetes system drives to make it so. One important benefit of this is automatic recovery. If something happens to your application, for example, a node crashes, then Kubernetes will restore the desired state.

The Kubernetes API is exposed by the Kubernetes API server, which is a component of the Kubernetes control plane. The Kubernetes control plane is transparent in that there are no hidden internal APIs in Kubernetes: Kubernetes components interact through the same API that Kubernetes exposes to its users.

Kubernetes’ declarative, API driven infrastructure means that components, such as nodes, talk to the Kubernetes API server to figure out what their state ought to be. Instead of having the decision centralised and sent out, each node is responsible for its own health, and figuring out its desired behaviour. If a node fails and is brought back up, the newly created node can query the API server to figure out what it’s supposed to do.

The declarative way the Kubernetes API server communicates with remote nodes is in contrast to traditional client-server relationships, where the client tells the server what to do in an imperative manner and the server does it. Building the Kubernetes API server this way would have meant it grew as more functionality was added; the API server would have been brittle and difficult to extend.

Kubernetes uses a pattern called level triggering, as opposed to edge triggering. An edge-triggered system responds to events; if it misses an event, the event needs to be replayed for the system to recover. A level-triggered system instead acts on the current state, so a missed event stops mattering once the state is observed again.

“If you are edge triggered you run risk of compromising your state and never being able to re-create the state. If you are level triggered the pattern is very forgiving, and allows room for components not behaving as they should to be rectified. This is what makes Kubernetes work so well.”

– Joe Beda, as quoted in Cloud Native Infrastructure, by Justin Garrison and Kris Nova

In Kubernetes, if any component goes down, when it comes back up, it requests the desired state from the Kubernetes API server and works to match that state. Components that can recover in this way tend to be more robust and the overall system is more reliable. This is especially true in distributed systems, where there are so many components in the system that the expectation is that there will always be components failing. Distributed systems need to be designed to tolerate the failure of components. If your system has one central manager component, which tells all the parts of the system what they should be doing, and that central manager component goes down, your system is down. Distributing that responsibility, so every component can figure out what it should be doing, makes the system more reliable. No longer is there a single point of failure.

What happens when the Kubernetes API server, which acts as a central point, goes down? All the components will continue to operate on the last information they received. When the API server comes back up, the components will then operate on the new state if there were any changes. If any of the components go down, the other components can continue to function independently of that failure. When failed components come back up, they can read the state they should work towards from the API server.
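The recovery behaviour described above can be sketched in a few lines of Python. This is a toy model, not Kubernetes code: the `reconcile` and `apply_actions` functions and the dictionary-based state are assumptions made for illustration. The point is that a level-triggered component compares the full desired state with what it observes, so it needs no replay of missed events.

```python
def reconcile(desired, actual):
    """Level-triggered step: compare full states and return corrective actions."""
    actions = []
    for name, spec in desired.items():
        if actual.get(name) != spec:
            actions.append(("apply", name, spec))
    for name in actual:
        if name not in desired:
            actions.append(("delete", name))
    return actions


def apply_actions(actual, actions):
    """Apply corrective actions to a copy of the observed state."""
    result = dict(actual)
    for action in actions:
        if action[0] == "apply":
            result[action[1]] = action[2]
        else:
            result.pop(action[1], None)
    return result


# Desired state, as a component would read it from the API server.
desired = {"web": {"replicas": 3}, "db": {"replicas": 1}}
# A component comes back up with stale knowledge; no events are replayed.
observed = {"web": {"replicas": 2}, "old-job": {"replicas": 1}}

converged = apply_actions(observed, reconcile(desired, observed))
```

Running `reconcile` again on the converged state yields no actions, which is why repeating the loop is safe and why a restarted component can simply start from whatever it finds.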

These design choices make Kubernetes reliable. They also make Kubernetes very composable and extensible. Because all components use the same Kubernetes API as you do as an end user, you can replace any default component with your own. You can also add new components to enable new functionality. This extensibility has helped create a vibrant ecosystem of Kubernetes-native open source projects that, like Jenkins X, are built on Kubernetes using Kubernetes resources and the Kubernetes API machinery.

Kubernetes is extended through Custom Resource Definitions (CRDs). A Kubernetes resource is an endpoint in the Kubernetes API that stores API objects of a certain type. Kubernetes uses API objects to represent the state of your cluster.

To create your own custom Kubernetes API object type, define a new CRD for your type and define its schema. Then you can create your own objects against the Kubernetes API server. In this way, a custom resource extends the Kubernetes API: creating CRDs is like embedding your own APIs inside Kubernetes itself. To use the custom API objects you have created, you write your own custom controllers that act on the data contained in your custom object types. Kubernetes controllers are the mechanism by which Kubernetes reconciles the actual state of your cluster with the state declared in the Kubernetes API.
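As a rough illustration of what defining a CRD involves, the sketch below builds a CRD manifest and one matching custom object as plain Python dictionaries. The group `example.com`, the `PipelineNote` kind, and its `message` field are hypothetical names chosen for this example; they are not Jenkins X or Tekton resources. The manifest layout follows the `apiextensions.k8s.io/v1` API.

```python
import json

GROUP = "example.com"      # hypothetical API group
PLURAL = "pipelinenotes"   # hypothetical plural resource name

crd = {
    "apiVersion": "apiextensions.k8s.io/v1",
    "kind": "CustomResourceDefinition",
    # The CRD name must take the form "<plural>.<group>".
    "metadata": {"name": f"{PLURAL}.{GROUP}"},
    "spec": {
        "group": GROUP,
        "scope": "Namespaced",
        "names": {
            "plural": PLURAL,
            "singular": "pipelinenote",
            "kind": "PipelineNote",
        },
        "versions": [{
            "name": "v1alpha1",
            "served": True,
            "storage": True,
            # The schema the API server validates custom objects against.
            "schema": {"openAPIV3Schema": {
                "type": "object",
                "properties": {"spec": {
                    "type": "object",
                    "properties": {"message": {"type": "string"}},
                }},
            }},
        }],
    },
}

# A custom object of the new type, as it would be submitted to the API server.
note = {
    "apiVersion": f"{GROUP}/v1alpha1",
    "kind": "PipelineNote",
    "metadata": {"name": "hello"},
    "spec": {"message": "to be acted on by a custom controller"},
}

# Serialize for use with tooling; kubectl accepts JSON manifests as well as YAML.
manifest = json.dumps(crd, indent=2)
```

The definition only teaches the API server to store and validate `PipelineNote` objects. Nothing happens to them until a custom controller watches the type and acts on it.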

How do CRDs relate to Kubernetes built-in types? Tim Hockin, co-founder of the Kubernetes project, has said, “If we had CRDs on day zero of Kubernetes there would be no built-in types.” If CRDs had existed from the start, pods and nodes and everything else would also be a CRD!

If they weren’t part of the original design, why were CRDs created? CRDs were first created as a way to extend Kubernetes functionality to enable rapid prototyping.

“That’s what fascinates me about CRD. It started as a prototyping tool. K8s API machinery was not intended to be a framework, but that is what shook out. If we did that intentionally we would have messed it up.”

– Tim Hockin, Twitter

It’s extremely interesting that CRDs, which started as a prototyping mechanism, are now the main resource definition mechanism in Kubernetes. This enables Kubernetes to be more modular, and many core Kubernetes functions are now built using custom resources.

The Kubernetes API machinery is now distilled such that it can be used as API machinery for any project, not just Kubernetes. The extensible nature of the Kubernetes API enables higher level applications and platforms to be built on Kubernetes. Jenkins X runs directly on Kubernetes, uses the Kubernetes API, and defines CRDs for its workflow. Moreover, the same Kubernetes API machinery that makes Kubernetes extensible also enables Kubernetes-native applications to integrate well with each other. Jenkins X both creates its own CRDs and integrates with other Kubernetes-native applications through the Kubernetes API to form a Kubernetes-native CI/CD platform.

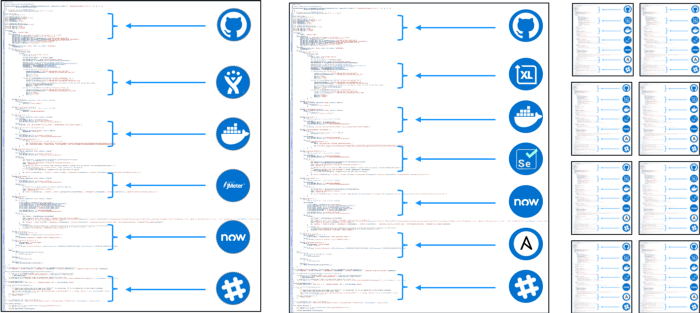

Jenkins X High Level Architecture:

As seen in the diagram above, Jenkins X integrates with a number of open source projects such as Tekton, Prow, and Vault, among others, to create an automated Kubernetes-native CI/CD platform. Jenkins X relies on CRDs to create new resources and extend the Kubernetes API. The Kubernetes API machinery enables Jenkins X to integrate with other open source projects through the Kubernetes API server.

Tekton is the pipeline execution engine for Jenkins X. Like Jenkins X, Tekton is Kubernetes-native and extends Kubernetes using CRDs. Jenkins X leverages Prow, or Jenkins X’s own Lighthouse, to signal to Tekton to run builds. Lighthouse is a lightweight webhook handler that listens for Git webhook events and uses them to create Tekton PipelineRun resources, which Tekton then uses to perform builds. Tekton generates a status update, which Jenkins X communicates back to source code management providers such as GitHub.

The integration between Jenkins X as a CI/CD platform and Tekton as the execution engine for Jenkins X happens within Kubernetes using CRDs and the Kubernetes API. That both projects are Kubernetes-native enables them to seamlessly integrate using the Kubernetes API machinery.

“Tekton Pipelines lets us power Jenkins X’s execution and management of pipelines natively within Kubernetes.”

– Andrew Bayer, Software Engineer, CloudBees, and creator of Jenkins X Pipeline Syntax

CDF Newsletter – May 2020 Article


Tekton is a project that evolved from an internal Google tool that used Knative to build and deploy software. In 2018, it was spun out as an independent project and donated to the Continuous Delivery Foundation.

The core component, Tekton Pipelines, runs as a controller in a Kubernetes cluster. It registers several custom resource definitions which represent the basic Tekton objects with the Kubernetes API server, so the cluster knows to delegate requests containing those objects to Tekton. These primitives are fundamental to the way Tekton works. Tekton’s building block approach starts with the smallest atom of work, the Step, aggregates Steps together in Tasks, and aggregates Tasks together in Pipelines.

If the nomenclature here feels confusing, don’t feel bad — it is complicated! Each tool in the space uses slightly different terms; this is something we’re working on standardizing in the CDF Interoperability SIG. We’d love your input – here’s how to participate! Tekton’s usage of these terms is clarified in the sig-interop Vocabulary definitions doc:

* *Step*: a specific function to perform.

* *Task*: a collection of sequential steps you would want to run as part of your continuous integration flow. A task will run inside a pod on your cluster.

* *ClusterTask*: Similar to Task, but with a cluster scope.

* *Pipeline*: stateless, reusable, parameterized collection of tasks. Tasks are linked together in a Pipeline, which describes the end-to-end deployment for an application.

* *PipelineRun*: an instantiation of a Pipeline definition, filling in the Pipeline’s parameters with concrete values

* *Pipeline Resource*: objects that will be input to or output from tasks

* *Trigger*: Triggers is a Kubernetes Custom Resource Definition (CRD) controller that allows you to extract information from event payloads (a “trigger”) to create Kubernetes resources.

A notable omission from the CRD list is the Step: Steps don’t have their own CRD because they are the smallest unit of execution and are always contained inside a Task. The Conditions and Dashboard Extension CRDs are still optional and experimental, but very exciting!
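The building-block relationship between these terms can be modelled with a few Python dataclasses. This is only a conceptual sketch: the class fields, the `missing_params` helper, and the example images and commands are assumptions made for illustration and do not mirror Tekton's actual resource schemas.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    image: str      # container image the step runs in
    command: list


@dataclass
class Task:
    name: str
    steps: list     # Steps run sequentially, inside one pod


@dataclass
class Pipeline:
    name: str
    params: list    # parameter names the Pipeline declares
    tasks: list


@dataclass
class PipelineRun:
    pipeline: Pipeline
    values: dict    # concrete values for the Pipeline's parameters

    def missing_params(self):
        """Parameters the Pipeline declares that this run does not supply."""
        return [p for p in self.pipeline.params if p not in self.values]


build = Task("build", [
    Step("compile", "golang:1.14", ["go", "build", "./..."]),
    Step("unit-test", "golang:1.14", ["go", "test", "./..."]),
])
pipeline = Pipeline("ci", params=["repo-url"], tasks=[build])
run = PipelineRun(pipeline, {"repo-url": "https://example.com/repo.git"})

step_names = [s.name for t in pipeline.tasks for s in t.steps]
```

Note that `Step` only ever appears nested inside a `Task` here, which matches the reason it has no CRD of its own.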

Tekton’s approach is particularly interesting from a tool interoperability standpoint. By focusing on these building blocks and the concrete representation of them as declarative configuration, Tekton creates a standard platform for CD in the same way that Kubernetes provides a platform for application runtimes. This allows user-facing tools to build on the platform rather than reinventing these primitives. Several projects have already taken up this approach:

* Jenkins X uses Tekton as its execution engine. It’s been an option for a while now, but recently the project announced it was moving to using Tekton exclusively. Jenkins X provides pipeline definitions and gitops workflows that are tailored for cloud-native CD.

* Kabanero is a project that enables teams to develop and deploy applications on Kubernetes, so architects can provide pre-approved application stacks for developers to work from. It uses Tekton Pipelines and several associated projects like Tekton Dashboard and Triggers; indeed the developers building the Dashboard are largely working on Kabanero and the IBM Cloud Devops Pipeline product.

* Relay by Puppet is a hosted service that uses Tekton as the execution engine for event-triggered devops and deployment workflows. (Full disclosure, this is the product I am working on!) It provides a YAML dialect for building workflows that can be triggered by external events, via API, or manually, to automate tasks that need to stitch together different tools and services.

* TriggerMesh have integrated Tekton Pipelines into their TriggerMesh Cloud project and are working on a tool called Aktion to translate GitHub Actions into Tekton Pipelines.

* There are more, too! Check out the Tekton Friends repo for a longer list of projects and end users building on Tekton.

As exciting as this activity is, I think it’s important to note there’s still a lot of work to be done. There’s a distinct difference between two projects both using Tekton as a common upstream platform and achieving interoperability between them! It’s a big problem and it’s easy to get overwhelmed with the magnitude of the whole thing. One of my earliest lessons when I moved from SRE into product management was: focus first on solving the pain points which end users feel most acutely. That can be some combination of pervasiveness (what percent of the overall user base feels it?) and severity (how bad is each individual incident?) – ideally, fix the thing which is worst on both axes! From an end user’s standpoint, CD tools have a pretty steep learning curve with a bunch of pitfalls. A sampling of these severe-and-pervasive pitfalls I’ve heard from our users as we’ve been building Relay:

* How do I wrap my head around the terminology and technology so I can get started?

* How do I integrate the parts of the build/test/deploy toolchain my organization needs to continue using?

* How do I operate (upgrade, monitor, troubleshoot) the tool once it’s up and running?

Interoperability isn’t a cure-all, but there are definitely areas where it could work like a soothing balm on all of this pain. Industry-standard terminology or, at a minimum, an authoritative Rosetta Stone for CD could help. At the moment, there are still pockets of debate on whether the “D” stands for Deployment or Delivery! (It’s “Delivery”, folks – when you mean “Deployment” you have to spell it out.)

Going deeper, it’d be hugely helpful to help users integrate the tools they’re already using into a new framework. A wide ecosystem of steps that could be used by any of the containerized CD tools – not just those based on Tekton but, for example, Spinnaker and Keptn as well – would have a number of benefits. For end users, it would increase the amount of content available “out of the box”, meaning they would have less work to integrate the tools and services they need. Ideally, no end user should have to create a step from scratch, because there’s a vast, easily discoverable library of things that accomplish the job they have. There’s also a benefit to maintainers of services and tools that end users want, like Kaniko, Gradle, and the cloud services, who have to build an integration with each execution framework themselves or rely on the community to do it. Building and maintaining one reusable implementation would reduce the maintenance burden and allow them to provide higher quality.

To put on my Tekton advocate hat for a moment, its well-defined container contract makes it easy to use general-purpose containers in your pipeline. If you want to take advantage of more specialized features the framework provides, the Tekton Catalog has a number of high-quality examples to build from. There are improvements on the way to aid the discoverability and reuse parts of the problem, such as the exciting new Tekton Hub donated by Red Hat.

The operability concerns are a real problem for CD pipeline tools, too. Although CD is usually associated with development, in many organizations the tool itself is considered a production service, because if there are problems committing, building, testing, and shipping code, the engineering organization isn’t delivering value. Troubleshooting byzantine failures in complex CI/CD pipelines is a specialized discipline requiring skills that span Quality Engineering, SRE, and Development. The more resiliently the CD tools are architected, and the more standard their interfaces for reporting availability and performance metrics, the easier that troubleshooting becomes.

Again, to address these from Tekton’s perspective, a huge benefit of running on Kubernetes is that the Tekton services that run in the cluster can take advantage of all the powerful k8s operability features. Fundamental capabilities that are highly valuable to operators and troubleshooters, like log aggregation, in-place upgrades, error reporting, and scale-out, all ride on top of the Kubernetes infrastructure. It’s not “for free” of course; nothing in distributed systems is ever truly “for free” and if anyone tries to tell you otherwise, the thing they’re selling you is probably *very* expensive. But it does mean that general-purpose Kubernetes skills and tooling go a long way towards operating Tekton at scale, rather than having to relearn or reimplement them at the application layer.

In conclusion, I’m excited that the interoperability conversation is well underway at the CDF. There’s a long way to go, but the amount of activity and progress in the space is very encouraging. If you’re interested in pitching in to discuss and solve these kinds of problems, please feel free to join the #sig-interoperability channel on the CDF Slack or check out the contribution information.

Originally posted on Medium by community member, Andreas Grimmer

Continuous Delivery (CD) and Runbook Automation are standard means to deploy, operate and manage software artifacts across the software life cycle. Based on our analysis of many delivery pipeline implementations, we have seen that on average seven or more tools are included in these processes, e.g., version control, build management, issue tracking, testing, monitoring, deployment automation, artifact management, incident management, or team communication. Most often, these tools are “glued together” using custom, ad-hoc integrations in order to form a full end-to-end workflow. Unfortunately, these custom ad-hoc tool integrations also exist in Runbook Automation processes.

Not only is this approach error-prone, but maintaining and troubleshooting these integrations in all their permutations is time-intensive too. There are several factors that prevent organizations from scaling this across multiple teams:

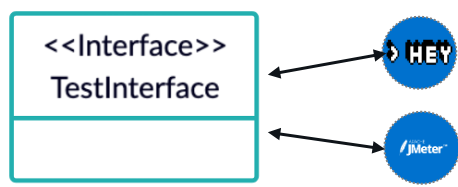

Let’s imagine an organization with hundreds of copy-paste pipelines, which all contain a hard-coded piece of code for triggering Hey load tests. Now this organization would like to switch from Hey to JMeter. Therefore, they would have to change all their pipelines. This is clearly not efficient!

In order to solve these challenges, we propose introducing interoperability interfaces, which abstract the tooling in CD and Runbook Automation processes. These interfaces should trigger operations in a tool-agnostic way.

For example, a test interface could abstract different testing tools. This interface can then be used within a pipeline to trigger a test without knowing which tool is executing the actual test in the background.
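A minimal Python sketch of such a test interface is shown below, using the Hey and JMeter example from earlier. The class and function names are hypothetical, as are the JMeter property names `target` and `requests` (a real test plan would have to read them). The sketch only builds the command lines, so it runs without either tool installed.

```python
from abc import ABC, abstractmethod


class LoadTestTool(ABC):
    """Tool-agnostic interface a pipeline calls to trigger a load test."""

    @abstractmethod
    def build_command(self, target_url, requests):
        ...


class HeyTool(LoadTestTool):
    def build_command(self, target_url, requests):
        # hey: -n sets the number of requests to run
        return ["hey", "-n", str(requests), target_url]


class JMeterTool(LoadTestTool):
    def __init__(self, test_plan):
        self.test_plan = test_plan

    def build_command(self, target_url, requests):
        # jmeter: -n non-GUI mode, -t test plan file, -J defines a property
        return ["jmeter", "-n", "-t", self.test_plan,
                f"-Jtarget={target_url}", f"-Jrequests={requests}"]


def run_test_stage(tool, target_url):
    """Pipeline code depends only on the interface, not on a specific tool."""
    return tool.build_command(target_url, requests=100)


hey_cmd = run_test_stage(HeyTool(), "http://svc.example.com")
jmeter_cmd = run_test_stage(JMeterTool("plan.jmx"), "http://svc.example.com")
```

With this shape, switching an organization from Hey to JMeter means changing which implementation is passed in, not editing every pipeline that calls `run_test_stage`.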

These interoperability interfaces are important, as confirmed by the fact that the Continuous Delivery Foundation has established a dedicated working group on interoperability, and by the open-source project Eiffel, which provides an event-based protocol enabling technology-agnostic communication, especially for Continuous Integration tasks.

By implementing these interoperability interfaces, we define a standardized set of events. These events are based on CloudEvents and allow us to describe event data in a common way.

The first goal of our standardization efforts is to define a common set of CD and runbook automation operations. We identified the following common operations (please let us know if we are missing important operations!):

For each of these operations, an interface is required, which abstracts the tooling executing the operation. When using events, each interface can be modeled as a dedicated event type.

The second goal is to standardize the data within the event, which is needed by the tools in order to trigger the respective operation. For example, a deployment tool would need the information of the artifact to be deployed in the event. Therefore, the event can either contain the required resources (e.g. a Helm chart for k8s) or a URI to these resources.
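To make the idea concrete, here is a hedged Python sketch of what a deployment event might look like as a CloudEvents 1.0 envelope. The event type `com.example.deployment.triggered`, the `uri` and `helmChart` data fields, and the `deployment_event` helper are all hypothetical; they are not taken from the Keptn spec. Only the context attributes `specversion`, `id`, `source`, and `type` come from CloudEvents itself.

```python
import uuid


def deployment_event(source, artifact_uri=None, inline_chart=None):
    """Build a CloudEvents 1.0 envelope asking some tool to deploy an artifact."""
    if (artifact_uri is None) == (inline_chart is None):
        raise ValueError("provide exactly one of artifact_uri or inline_chart")
    # The event carries either a URI to the resources or the resources themselves.
    if artifact_uri is not None:
        data = {"uri": artifact_uri}
    else:
        data = {"helmChart": inline_chart}
    return {
        # Required CloudEvents context attributes
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": source,
        "type": "com.example.deployment.triggered",  # hypothetical event type
        # Optional attribute describing the payload encoding
        "datacontenttype": "application/json",
        "data": data,
    }


event = deployment_event(
    "/pipelines/demo",
    artifact_uri="https://charts.example.com/demo-0.1.0.tgz",
)
```

Because the event names an operation rather than a tool, any deployment tool that understands the event type could pick it up and act on the referenced artifact.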

We already defined a first set of events https://github.com/keptn/spec, which is specifically designed for Keptn — an open-source project implementing a control plane for continuous delivery and automated operations. We know that these events are currently too tailored for Keptn and single tools. So, please

In order to work on a standardized set of events, we would like to ask you to join us in Keptn Slack.

We can use the #keptn-spec channel in order to work on standardizing interoperability interfaces, which eventually are directly interpreted by tools and will make custom tool integrations obsolete!

The Continuous Delivery Foundation (CDF) Governing Board (GB) agreed to and ratified 9 strategic goals in early October 2019. One of the strategic goals identified by the CDF GB is fostering tool interoperability.

Recognition of the importance of interoperability and identifying it as one of the strategic goals is a very important step for CDF to take for users. Users and organizations employ various CI/CD tools and technologies depending on their needs and where they are in their CI/CD transformation. Organizations often employ more than one tool in various stages of their CI/CD pipelines due to different capabilities provided by the tools and this is perhaps one of the biggest benefits users get by using open technologies for their CI/CD needs. For example, CDF member Salesforce has over 20 different CI/CD tools internally thanks to acquisitions and different requirements in teams.

However, one of the challenges users face is the lack of interoperability across the CI/CD tools and technologies, resulting in various issues while constructing and running pipelines such as passing metadata and artifacts between the tools or achieving traceability from commit to deployment. Often users end up building their “own glue code” to address what is a common problem, further complicating moving from one tool to another and adopting new technologies and methodologies.

These “glue code solutions” are generally specific to users’ needs and tools rather than being loosely coupled and agnostic to tooling and technology. Additionally, these solutions are not visible to other users and the communities, which leaves users vulnerable to outages in their CI/CD pipelines when the tools change in their respective projects (e.g., non-backward-compatible changes to the APIs or changes in data models).

Therefore, focusing on tool interoperability is critical.

There has been significant collaboration going on in this area. Linux Foundation Networking (LFN), OpenStack Foundation (OSF), and Cloud Native Computing Foundation (CNCF) projects have done a lot to raise awareness of CI/CD interoperability challenges. In addition to these communities, the CDF founding projects Spinnaker, Jenkins, Tekton, and Jenkins X have been collaborating and sharing ideas. However, there are many more users, projects, and communities that are looking for answers to similar interoperability challenges, are on their way to developing solutions, or are simply trying to find like-minded people to work with.

We believe the work should happen in a neutral forum where users come together with maintainers of open source CI/CD projects and have a dialog about the challenges we need to address.

This is why the CDF Interoperability SIG was launched, led by Fatih Degirmenci of Ericsson and with support from representatives from Netflix, Google, China Mobile, CloudBees, and others.

We, the CDF Interoperability SIG, aim to provide such a forum and enable a dialog around interoperability in order to:

Membership to the Interoperability SIG is open to the public. We invite users and contributors to open source CI/CD projects to join us to share ideas, use cases, challenges, and solutions with each other.

Here are some of the ways you can take part in the Interoperability SIG and start collaborating:

Finally, we would like to thank everyone who has listened to our ideas, shared their thoughts, taken part in crafting the proposal, and most importantly, encouraged us with their +1s!