The CDF Ambassadors provide collective wisdom through our collaborative blogs. “Future trends for Continuous Delivery” is the first in this series.

With the advancement of Continuous Delivery practices, and of the tools and processes that support the movement, I would like to explore Continuous Delivery as a platform: one where we start to make decisions through a well-architected platform for Continuous Delivery. Though a well-architected platform seems straightforward, it has to be shaped carefully and designed to enable faster, safer and more secure software products. The platform itself must provide flexibility and choice, and be designed to minimize our past biases.

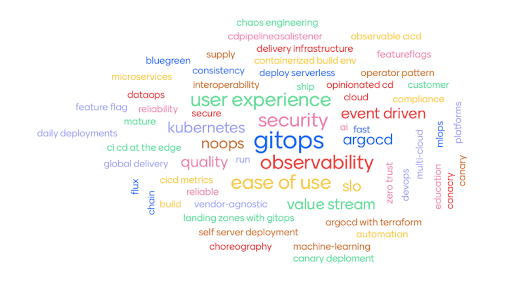

Many thought-provoking ideas are presented by our ambassadors in this blog, which can act as a catalyst for such a platform for Continuous Delivery. Key features to consider for enabling Continuous Delivery as a platform in the future include, but are not limited to:

- Event-driven Continuous Delivery orchestration as a feature

- Interoperability, enabling an ecosystem of Continuous Delivery applications & tools

- Present & visualize an integrated system of SLOs & SLAs to rank and model the reliability of Continuous Delivery

- Ultimately pushing the boundaries of Continuous Delivery to a more user-centric approach, where organizations present choices rather than enforcing them

– Ambassador Chair [Garima Bajpai]

Here are the opinions of the CDF Ambassadors on Future Continuous Delivery Trends (in no particular order):

Ambassador: Tracy Ragan

Expect a Major Redesign in Continuous Delivery

In technology, disruption is normal. We should not be surprised that it is time for a remodel. Jenkins, originally Hudson, is almost 20 years old. Many aspects of our software development practices have changed over those 20 years. While we were able to enhance our CD workflows to address most of those changes, moving to a cloud-native technology where microservices are key requires more than a few tweaks.

The biggest change we will see in the very near future is the number of code updates moving to production environments. We thought that ‘agile’ practices increased our velocity, so we’ll wait and see what a microservice architecture will do. Think of moving many pieces to various environments all day long. As we start down this journey, we will learn that separating the ‘data’ from the ‘definition,’ and moving to more declarative practices, will be key. In addition, managing the software supply chain (generating SBOMs and tracking CVEs and licensing, all aggregated up to the ‘logical’ application level) will become more challenging and will call for new approaches. With a microservice implementation, the concepts of application versions and SBOMs are more difficult to manage, just when we have been told that SBOMs are essential to harden cybersecurity. We will need methods of microservice governance.

In this new CD methodology, we will begin seeing a shift to ‘event-driven’ pipelines, managed with policies. This new event-based CD means less time spent building out scripted pipeline processes, and tighter audits of the workflow itself. The introduction of microservice catalogs will be essential in tracking changes and having the visibility to determine the impact of an update before it is deployed.

And finally, remember that not all microservices are the same. Our pipeline will become more dynamic, catering to the risk level of a microservice. Dynamic pipelines will allow us to move low-impact microservices quickly, while treating high-risk microservices with a more cautious approach. The good news is that DevOps will continue to build and grow, and DevOps professionals will always find a place to serve.
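To make the idea of a risk-aware pipeline a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the stage names, the three risk tiers and the `plan_pipeline` helper do not come from any particular CD tool.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical stage names and risk tiers, chosen only for illustration.
BASE_STAGES = ["build", "unit-test", "deploy"]
EXTRA_GATES = {
    "low": [],
    "medium": ["integration-test"],
    "high": ["integration-test", "security-scan", "manual-approval"],
}


@dataclass(frozen=True)
class Microservice:
    name: str
    risk: str  # "low", "medium" or "high"


def plan_pipeline(service: Microservice) -> List[str]:
    """Return the ordered stages for a service, adding extra gates before deploy."""
    if service.risk not in EXTRA_GATES:
        raise ValueError(f"unknown risk level: {service.risk!r}")
    stages = list(BASE_STAGES)
    deploy_index = stages.index("deploy")
    # Slice assignment inserts the extra gates just ahead of the deploy stage.
    stages[deploy_index:deploy_index] = EXTRA_GATES[service.risk]
    return stages


if __name__ == "__main__":
    for svc in (Microservice("avatar-resizer", "low"), Microservice("payments", "high")):
        print(svc.name, "->", " > ".join(plan_pipeline(svc)))
```

A low-risk service gets the short path, while a high-risk one picks up extra gates before it can deploy. In practice the risk level would more likely come from a policy or a microservice catalog than from a hard-coded field.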

Ambassador: Andreas Grabner

Continuous Delivery has already adopted a lot of development principles, such as:

- modularization of code (look at your pipelines)

- focusing on APIs for better integrations (look at all the tools you integrate through APIs)

- or version control (look at where your configuration is stored)

But development has evolved as architectures moved from monoliths to microservices and as “the cloud” has brought us a lot of “Everything as a Service”.

Continuous Delivery needs to evolve in the same way to keep up with the new demands of development:

- We need to think about event-driven delivery orchestration just as microservices get orchestrated through events.

- We need to establish standards for interoperability that allow us to easily connect our existing tools or switch to new ones when needed. Otherwise, we will keep building and maintaining very specific point-2-point (tool-2-tool) integrations.

And we need to think about how to leverage observability to ensure optimal operation of our delivery tooling, as well as how to use context-enriched data to automate delivery decisions such as “promote or not” or “keep or rollback”.

Ambassador: David Espejo

Continuous Delivery is all about designing, adopting or distributing the necessary patterns, tools and processes to help developers and operators to deliver secure and stable code to production environments with high levels of automation, compliance and reusability. Nevertheless, most of the tools, processes and patterns still reflect legacy approaches that hinder the agility required by modern software.

Such is the case with the orchestration pattern, widely implemented in today’s Continuous Delivery space. Each resource in the software supply chain knows how to complete its own tasks, but depends on a central orchestrator that controls the flow of information between resources and also deals with the actual implementation of each step on the path to production.

Adopting patterns from Event-Driven Architecture can help unlock greater agility, reliability, and separation of concerns, among other benefits. The choreography pattern is a good example because it assumes that resources (that is, steps in your software supply chain) are autonomous and largely independent from each other. The only connection between them is a subscription to a specific type of output which, once generated by one resource, is captured by the following resource, which knows exactly what to do next.

The choreography pattern can offer a sufficient set of abstractions to give platform operators the standardization they expect when defining the components of a supply chain. It also leaves enough flexibility for developers, who will see a highly opinionated platform with a very simple contract. That makes it much easier to hot-swap tools at specific steps in the supply chain for different workload needs.
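As a rough illustration of choreography, the following Python sketch wires three supply chain steps together purely through event subscriptions. The `EventBus` class, the event type names and the payload fields are all hypothetical; a real platform would use a proper event broker and an agreed event format rather than this in-memory stand-in.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, str]], None]


class EventBus:
    """A tiny in-memory publish/subscribe bus (a stand-in for a real broker)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)
        self.log: List[str] = []  # event types, in the order they were published

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, str]) -> None:
        self.log.append(event_type)
        for handler in self._subscribers[event_type]:
            handler(payload)


def wire_supply_chain(bus: EventBus) -> None:
    """Register three autonomous steps; no central orchestrator sequences them."""

    # Each step knows only the event it consumes and the event it emits.
    def build(payload: Dict[str, str]) -> None:
        image = f"registry.example/{payload['repo']}:{payload['commit']}"
        bus.publish("image.built", {**payload, "image": image})

    def scan(payload: Dict[str, str]) -> None:
        bus.publish("image.scanned", {**payload, "scan": "passed"})

    def deploy(payload: Dict[str, str]) -> None:
        bus.publish("service.deployed", {**payload, "environment": "staging"})

    bus.subscribe("source.committed", build)
    bus.subscribe("image.built", scan)
    bus.subscribe("image.scanned", deploy)


if __name__ == "__main__":
    bus = EventBus()
    wire_supply_chain(bus)
    bus.publish("source.committed", {"repo": "shop", "commit": "abc123"})
    print(bus.log)
```

Swapping the scanner for another tool only means subscribing a different handler to `image.built`. The build and deploy steps stay untouched, which is the hot-swap property described above.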

Only time will tell how widely these agile and truly distributed patterns will be adopted in the CD market, but it’s an exciting time to be alive!

Ambassador: Kevin Collas-Arundell

Deploy on Friday (AKA: Read Only Friday Considered Harmful)

It sounds extreme, but before you skip past it, consider this challenge. Poll your team: what’s the first feeling that each of you has after a deployment? Fear or excitement? Horror or happiness? On a scale of 1 to 10, how much would you want to be on call after a deployment?

This challenge is an important part of measuring your Continuous Delivery pipelines, processes and tools. We know that every deployment is a risk. Every time we push, we need to look at our tools and processes and know that they work as well as they can. This subject has been explored by Dr. Nicole Forsgren, Jez Humble and Gene Kim. They found that cycle time, deployment frequency, change failure rate and mean time to recovery are closely related and correlate with other beneficial metrics like delivery success and profitability. We know our work isn’t done until it’s in production and, much like Continuous Integration, we become safer, faster and better when we ship smaller releases more often.
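If you want to put numbers on those four metrics, a minimal sketch could look like the Python below. The `Deployment` record and its field names are assumptions made for this example, and cycle time is approximated here as the time from commit to production. Real figures would come from your version control, CD and incident tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Deployment:
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def delivery_metrics(deployments: List[Deployment], period_days: int) -> dict:
    """Compute the four metrics over a non-empty list of deployments."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    failures = [d for d in deployments if d.failed]
    cycle_times = [d.deployed_at - d.committed_at for d in deployments]
    recoveries = [
        d.restored_at - d.deployed_at for d in failures if d.restored_at is not None
    ]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "mean_cycle_time": sum(cycle_times, timedelta()) / len(cycle_times),
        "change_failure_rate": len(failures) / len(deployments),
        # None when there is no recorded recovery to average over.
        "mean_time_to_recovery": (
            sum(recoveries, timedelta()) / len(recoveries) if recoveries else None
        ),
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    h = timedelta(hours=1)
    history = [
        Deployment(t0, t0 + 2 * h),
        Deployment(t0 + 3 * h, t0 + 4 * h, failed=True, restored_at=t0 + 4.5 * h),
        Deployment(t0 + 24 * h, t0 + 26 * h),
        Deployment(t0 + 27 * h, t0 + 30 * h),
    ]
    for name, value in delivery_metrics(history, period_days=2).items():
        print(f"{name}: {value}")
```

Tracking these over time, rather than as a one-off snapshot, is what shows whether smaller and more frequent releases are actually making deployments less scary.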

If we are confident in our processes when we press the big red button, then we are good to go. Ship all the shiny new things! Of course, there will be problems for some of us. Many of us don’t have smooth, reliable pipelines, or organizational cultures that support frequent delivery. In that situation, this challenge can rightly scare you. When we are scared of deploying software, something needs to be done.

This is when we slow down or stop our feature delivery and work on building our confidence: making our pipelines more resilient or our database migrations more stable, fixing those weird crashes on new deployments, or implementing feature flags at last. Because of the importance of the key metrics found by Forsgren, we know that a large part of the next 10 years of Continuous Delivery will be similar to the last. That means delivering small, continuous improvements to our paths to production to ensure that we are:

- Delivering sooner so we can deliver faster

- Delivering faster so we can deliver often

- Delivering often so we can deliver safer

- Delivering safer code so we can deliver sooner

Ambassador: Michel Schildmeijer

Observability in CD

As Continuous Delivery becomes a topic of discussion, we hear a lot about the need to “deliver software faster”. But not every company is like Spotify, Netflix or Facebook, which may release every hour.

If you look at the majority of companies, standardization, stability, flexibility and continuity are more important than just speed. Yet, agility and speed of application delivery are now a matter of life and death for many companies.

This push for speed might lead to an increase in undetected errors. Despite previous investment in monitoring and troubleshooting tools, enterprises continue to be confronted with application instability and outages that cost them top-line revenue and can damage their brand.

To respond to this combination of challenges, infrastructure and operations and development teams should rethink the way they manage applications and services, and it is time for a generational leap in the capabilities that I&O teams should expect from management solutions.

Agile and DevOps techniques have pervaded software development, but a great number of organizations still do not have end-to-end visibility. Too often, DevOps is implemented only halfway: continuity breaks off somewhere along the chain, and the result is a lack of visibility into rapidly changing application topologies. Add to that the drastic changes in application architectures, for instance a move to a more cloud-native or microservice-oriented one, and visibility at every point of developing, building and deploying software becomes of major importance.

Therefore, a trend I foresee is the growing involvement of observability, meaning monitoring, analytics and telemetry tools becoming part of the CD pipeline. OpenTelemetry and OpenTracing are two of the open source solutions that address these concerns and should therefore be included throughout development and software delivery.

Ambassador: Giorgi Keratishvili

- DevSecOps: integrating more security tools and strategies as supply chain security is becoming an issue.

- KPIs: creating the metrics to measure how the DevOps team is working, improving and cutting downtime. The biggest cost is mostly engineering time, and business loves numbers.

- GitOps: as the industry progresses, the de facto standard in the DevOps space is to describe the environment in a text-based, versioned form, which lets us show the desired configuration and makes auditing easier in regulated environments.

- Configuration as Code: same as above

Ambassador: Ricardo Castro

Building and releasing software is complex. Teams want to build software faster. Organizations want to get their products in front of users as soon as possible. To stay competitive, companies invest in automation. To that end, many of them started moving their pipelines to some form of CI/CD.

While your mileage may vary, once you have experienced CI/CD, you don’t want to go back. But what comes next? What are some of the trends that are emerging or making themselves universal?

Although GitOps has been around for a while, it will become ubiquitous. Treating your SDLC as code allows you to adopt well-established software engineering practices. At the same time, it paves the way for Progressive Delivery. Canary Releases, Blue/Green deployments or Feature Flags will become standard practices.

An interesting trend to keep an eye on is the increasing influence of AI and ML in software delivery. They can augment linters, static and dynamic analysis to increase software quality. They can also help detect patterns for potential system issues.

The adoption of eBPF will gain traction and influence how teams monitor and deliver software. Its observability capabilities will provide valuable insights to help teams make decisions.

Global software delivery (e.g. multiple locations around the globe) will become increasingly important. Operating in a global market will push teams to build and deliver software capable of meeting its unique demands.

Many of these trends will work together. Teams will be able to deliver high-quality software faster.

Ambassador: Rob Jahn

Progressive delivery promises to bring faster delivery, improve innovation and reduce user impact through smaller rollouts, automation, and deployment strategies such as feature flags. But with this new speed, teams still must ensure:

- Operational oversight of releases and versions

- Performance and security service levels

- Observability tooling is in sync with release changes

- Release problems are detected and resolved quickly

To address this, automated observability and quality controls that reduce risk and the time taken for manual analysis and intervention will mature and become “must-have” capabilities.

Specific capabilities include:

- Self-service, GitOps-based monitoring configuration that keeps observability up to date with evolving software requirements.

Developers will define what to monitor and what to alert on by checking monitoring configuration files into version control systems along with the application’s source code. With each “Commit” or “Pull Request”, code gets built, deployed and automatically monitored with dashboards, tagging and alerting rules. This approach allows SRE teams to establish guardrails and standards while giving Dev teams the flexibility to control the specific settings using their know-how.

- Automated gathering and evaluation of technical, operational, and business Service Level Indicators (SLIs) that can stop Continuous Delivery pipelines if Service Level Objectives (SLOs) are violated.

The concepts of SLIs and SLOs as defined in the Google SRE handbook will be implemented for every release in every pipeline. Service levels will be declarative, defined by developers in version control systems along with the application’s source code. Automated SLO evaluations for a release will detect errors and drive automation to notify teams, update tickets, and promote releases. This “shift-left” capability will harness the metrics generated by application, end-user, and infrastructure observability platforms to ensure new releases meet the objectives of all Biz, Dev, and Ops stakeholders.
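A pipeline gate of this kind could be sketched as follows. This is only an illustration under stated assumptions: the `SLO` structure, the indicator names and the `gate` function are hypothetical, and in a real setup the objectives would be loaded from a file kept in version control while the measured values would come from an observability platform.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class SLO:
    sli: str          # name of the indicator, e.g. "error_rate"
    comparison: str   # "<=" (lower is better) or ">=" (higher is better)
    threshold: float  # the objective the measured value is compared against

    def __post_init__(self) -> None:
        if self.comparison not in ("<=", ">="):
            raise ValueError(f"unsupported comparison: {self.comparison!r}")


def evaluate(slos: List[SLO], measured: Dict[str, float]) -> List[str]:
    """Return human-readable violations; an empty list means every SLO is met."""
    violations = []
    for slo in slos:
        if slo.sli not in measured:
            # Missing data is treated as a violation rather than a silent pass.
            violations.append(f"{slo.sli}: no data")
            continue
        value = measured[slo.sli]
        met = value <= slo.threshold if slo.comparison == "<=" else value >= slo.threshold
        if not met:
            violations.append(f"{slo.sli}: {value} is not {slo.comparison} {slo.threshold}")
    return violations


def gate(slos: List[SLO], measured: Dict[str, float]) -> str:
    """Decide whether the pipeline should promote the release or stop."""
    return "stop" if evaluate(slos, measured) else "promote"


if __name__ == "__main__":
    # Hypothetical objectives for one release.
    slos = [SLO("error_rate", "<=", 0.01), SLO("availability", ">=", 0.999)]
    print(gate(slos, {"error_rate": 0.002, "availability": 0.9995}))
    print(gate(slos, {"error_rate": 0.05, "availability": 0.9995}))
```

The list returned by `evaluate` is what would feed the follow-up automation mentioned above, such as notifying teams or updating tickets.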