CDF Newsletter – June 2020 Article


Contributed by Marky Jackson, CDF Ambassador

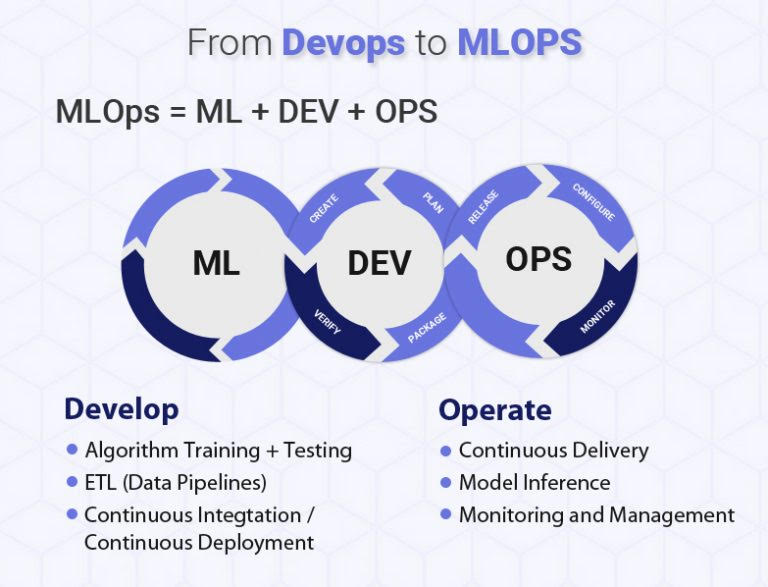

“MLOps (a compound of Machine Learning and “information technology OPerationS”) is a new discipline/focus/practice for collaboration and communication between data scientists and information technology (IT) professionals while automating and productizing machine learning algorithms.”

— Nisha Talagala (2018)

Machine learning is a method of data analysis that automates analytical model building. It is a branch of artificial intelligence based on the idea that systems can learn from data, identify patterns, and make decisions with minimal human intervention.

To integrate a machine learning system into a production environment, you need to orchestrate the steps in your machine learning pipeline and automate the pipeline’s execution so that your models are trained continuously. To experiment with new ideas and features, you also need to apply CI/CD practices to new implementations of those pipelines.
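As a rough illustration of what orchestrating pipeline steps can mean, here is a minimal sketch in Python. The step names (`validate_data`, `train`, `evaluate`), the `run_pipeline` helper, and the toy mean-value "model" are all hypothetical and chosen only for this example; a real pipeline would hand these stages to an orchestrator and a proper training library.

```python
from typing import Any, Callable, Dict, List

# A shared context dictionary is passed from step to step (an assumption
# made for this sketch, not a standard API).
Context = Dict[str, Any]
Step = Callable[[Context], Context]


def validate_data(ctx: Context) -> Context:
    """Fail fast if there is nothing to train on."""
    if not ctx["rows"]:
        raise ValueError("training data is empty")
    return ctx


def train(ctx: Context) -> Context:
    """Toy 'model': always predict the mean of the training targets."""
    targets = [target for _, target in ctx["rows"]]
    return {**ctx, "model": sum(targets) / len(targets)}


def evaluate(ctx: Context) -> Context:
    """Record the mean absolute error of the toy model on the same rows."""
    errors = [abs(target - ctx["model"]) for _, target in ctx["rows"]]
    return {**ctx, "mean_abs_error": sum(errors) / len(errors)}


def run_pipeline(steps: List[Step], ctx: Context) -> Context:
    """Run each step in order; an exception in any step stops the run."""
    for step in steps:
        ctx = step(ctx)
    return ctx


# The ordered list is the pipeline definition that automation would re-run
# whenever new data or new code arrives.
PIPELINE: List[Step] = [validate_data, train, evaluate]

if __name__ == "__main__":
    result = run_pipeline(PIPELINE, {"rows": [(1, 2.0), (2, 4.0)]})
    print(result["model"], result["mean_abs_error"])
```

Because the pipeline is just an ordered list of functions, the same definition can be triggered by a scheduler for continuous training or by a CI job when a new implementation is proposed.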

Consistency

For most engineering organizations, it is no big deal to look up the components of a release from a year ago and re-deploy the identical version into production. By contrast, without adequate tooling, the chances of reproducing a machine learning model from a year ago are slim. Reproducing it means being able to trace all of the inputs: dataset, code, container/runtime, and model-training parameters, to name a few.
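One way to picture tracing the inputs is a small manifest that is stored alongside every trained model. The sketch below is only illustrative: the `RunManifest` class, its field names, and the `sha256_bytes` helper are hypothetical, and it relies solely on the Python standard library.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Dict


def sha256_bytes(data: bytes) -> str:
    """Content hash used to pin the exact dataset bytes."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class RunManifest:
    """The inputs that together determine a training run (hypothetical fields)."""
    dataset_sha256: str    # hash of the training data
    code_revision: str     # e.g. a version-control commit id
    container_image: str   # runtime the training ran in
    training_params: Dict[str, Any] = field(default_factory=dict)

    def fingerprint(self) -> str:
        """Stable hash over all inputs; key order does not affect the result."""
        canonical = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    manifest = RunManifest(
        dataset_sha256=sha256_bytes(b"example training rows"),
        code_revision="abc123",          # placeholder value
        container_image="trainer:1.0",   # placeholder value
        training_params={"learning_rate": 0.01, "epochs": 5},
    )
    print(manifest.fingerprint())
```

If two runs share a fingerprint, they were trained from the same recorded inputs; if any input changes, the fingerprint changes with it, which makes a year-old model traceable back to what produced it.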

No matter the reason, consistency is paramount. The path towards machine learning can be viewed as a paradigm shift from ad-hoc processes to a deterministic way of working.

Cooperative

Let’s say, for example, you are working on a Python project, and the work you are doing lives on a siloed EC2 instance. If you’re working alone, you can get away with this, but once you put a model into production or have a handful of people working as a team, this setup is extremely brittle. The absence of a collaborative environment becomes especially problematic as the number and complexity of models increase.

In theory, collaboration begins with a unified hub where all activity, lineage, and model performance can be tracked. That includes, but isn’t limited to, training runs, Jupyter notebooks, hyperparameter searches, visualizations, statistical metrics, datasets, code references, and a repository of model artifacts (a model repository, as it is often called). Adding access control and audit logging is also a critical step.
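To make the idea of a unified hub a little more concrete, here is a deliberately tiny in-memory sketch. The `Run` and `TrackingHub` names and their fields are hypothetical, invented for this example; a production hub would persist this data and enforce access control rather than keep it in a dictionary.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Run:
    """One training run plus the lineage needed to explain it (hypothetical fields)."""
    run_id: str
    owner: str
    dataset: str
    code_ref: str
    params: Dict[str, float] = field(default_factory=dict)
    metrics: Dict[str, float] = field(default_factory=dict)
    artifacts: List[str] = field(default_factory=list)


class TrackingHub:
    """Shared record of runs with a simple append-only audit log."""

    def __init__(self) -> None:
        self._runs: Dict[str, Run] = {}
        self.audit_log: List[str] = []

    def log_run(self, run: Run) -> None:
        if run.run_id in self._runs:
            raise ValueError(f"run {run.run_id!r} already logged")
        self._runs[run.run_id] = run
        self.audit_log.append(f"{run.owner} logged run {run.run_id}")

    def best_run(self, metric: str, higher_is_better: bool = True) -> Run:
        """Return the run with the best value for the given metric."""
        candidates = [r for r in self._runs.values() if metric in r.metrics]
        if not candidates:
            raise LookupError(f"no run reports metric {metric!r}")
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r.metrics[metric])


if __name__ == "__main__":
    hub = TrackingHub()
    hub.log_run(Run("run-1", "alice", "sales-v1", "abc123", metrics={"accuracy": 0.81}))
    hub.log_run(Run("run-2", "bob", "sales-v1", "def456", metrics={"accuracy": 0.86}))
    print(hub.best_run("accuracy").run_id)
```

Even in this toy form, every teammate can see who trained what, from which dataset and code reference, and which run currently performs best.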

At the end of the day, insight and collaboration are essential to machine learning teams.

Infrastructure Construct

In contrast with traditional software engineering, machine learning in practice requires a large, sometimes massive, amount of computational power and storage and, more often than not, snowflake infrastructure built around hardware such as GPUs and TPUs. Machine learning teams need infrastructure that makes it easy to schedule and scale workloads without years of experience in networking, Kubernetes, Docker, and storage systems.

A few examples of infrastructure automation:

- Multi-cloud: A machine learning platform should make it easy to train a model on-premises and seamlessly deploy it to the public cloud.

- Scaling workloads: As computational demands increase, training or tuning models across multiple compute instances becomes essential. That calls for shared storage volumes designed for a distributed set of containers running on heterogeneous hardware.

Ideally, machine learning teams should operate with full autonomy and own the entire stack; that way, they are much more agile. Data scientists’ demands for compute and storage also need to be met so that they can move faster in the training phase of their work. With MLOps, the training phase is infrastructure-agnostic and scalable, and it minimizes complexity for the data scientist.

Unbroken

Today, there is still very little automation in the production of machine learning models.

In CI/CD, merging a software developer’s code triggers a series of automated steps. Abstractions like these are critical to application reliability and developer velocity. Sadly, many of these abstractions have no equivalent in the machine learning world.

Closing

Ultimately, the time it takes to move from concept to production and deliver business value is a significant hurdle in the industry. That’s why we need good MLOps practices designed to standardize and streamline the lifecycle of machine learning in production.

DevOps as a practice ensures that the software development and IT operations lifecycles are efficient, well documented, scalable, and easy to troubleshoot. MLOps incorporates these practices to deliver machine learning applications and services at high velocity.

There is some interesting work being done in the field of MLOps. One example is a Google Summer of Code project for the Jenkins project. It involves interaction between a Jenkins node and an IPython kernel, and it builds a Jenkins plugin that integrates existing polyglot notebook kernels to support notebook-like computations as Jenkins build tasks. The goal is to allow data scientists to define production-grade MLOps as Jenkins builds, combining typical Jenkins build tasks with notebook code fragments as build steps. The plugin will support a new build task that executes code via an appropriate language ‘kernel’, as currently supported by existing notebooks such as Zeppelin and Jupyter. You can read more about that here.

The Continuous Delivery Foundation is working to define the general DevOps drivers that apply to MLOps, as well as the drivers that are unique to machine learning solutions and therefore represent MLOps-specific requirements.

More regarding the roadmap can be found here.

If you would like to learn more or become involved, please do join us. Information about regular meetings can be found here.