Contributed by: Anna Jacobi

Artificial Intelligence is in full “sugar rush” mode. Executives chase transformation. Headlines buzz with AI hype. Demos dazzle. But underneath all the sweetness, the structure isn’t always in place. Frosting doesn’t hold a cake together—neither does AI alone. The structural integrity comes from the sponge: infrastructure, pipelines, processes, governance. Skip the middle layer, and your system will fall apart.

This article focuses on that middle: the foundational systems and the principles CI/CD practitioners have emphasized for years. Speed without security is fragile, automation without observability is dangerous, and delivery without trust is brittle. As AI heats up, it's time to recenter the conversation on the middle of the cake: the infrastructure, processes, and federated trust layers that make AI durable, repeatable, and real.

AI as the Sugar Rush

AI has sped up digital transformation. It has put complex workflows, from code generation and synthetic data to real-time decision support, within reach of anyone with a browser. It delivers powerful capabilities and seemingly instant productivity.

But like sugar, it’s addictively appealing without being nourishing. Teams chase shiny prototypes. Executives green-light AI pilots while ignoring pipeline hardening. Suddenly, “AI-powered” is stamped on solutions that collapse under real-world demands.

The sugar rush is real—but crashes follow. Models hallucinate. Pipelines lack provenance. Deployments sidestep governance. Trust erodes. A model without delivery discipline is just frosting, no cake.

The Middle of the Cake: Infrastructure and Delivery

What actually keeps AI systems standing is the part people rarely mention:

- Pipelines (CI/CD): Automated flow for code, data, and models from dev to prod. Without them, AI is artisanal—not scalable.

- Observability: Logs, metrics, traces—and especially model telemetry. AI systems are probabilistic; without visibility, drift and bias go undetected.

- Governance: Compliance, access control, audit trails. Absent these, AI at scale is a liability.

- Knowledge Management: Recipes, playbooks, federated exchanges—so teams reuse, not recreate.

In simple terms: CI/CD is the sponge, trust is the egg, governance is the oven—and AI is the sugar.

This has been the Continuous Delivery Foundation's message since before AI was in vogue. Jenkins, Tekton, and Spinnaker were never about frosting. They were about building infrastructure that made delivery safe, repeatable, and fast. Today, those same principles govern not just software deployments but also models, datasets, and agents.

Acceleration Without Foundation

The pandemic compressed a decade of digital change into two years. Then AI supercharged it. But fast-forwarding on shaky ground? That's a disaster in the making.

Consider:

- Hallucinations at scale. Without observability, there is no way to catch bad outputs before they reach users.

- Shadow deployments. Notebook experiments that reach production without review expose systems to untested code and unvetted data.

- Lost provenance. Without metadata trails, there is no way to answer auditors, customers, or regulators.

When you pour sugar on a crumbling sponge, you don’t get innovation—you get collapse.

Trust Is the Missing Ingredient

Trust isn’t a checkbox—it’s baked in.

Trust in AI comes from:

- Provenance: Knowing exactly where models, data, and agents originated.

- Repeatability: Rerun the pipeline, get the same results.

- Observability: Detect drift, bias, failures.

- Federation: Share AI capabilities securely, without losing control.
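To make provenance and repeatability concrete, here is a minimal Python sketch, assuming a pipeline that can hash its own inputs. The `ProvenanceRecord` fields, the `record_build` helper, and the fingerprint scheme are hypothetical illustrations, not a standard format (attestation formats such as SLSA provenance exist for real pipelines). The record captures who built an artifact, from which code and data, and under which policy; the fingerprint hashes only the inputs that determine the result, so a rerun by a different person at a different time can be checked against the original.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class ProvenanceRecord:
    # Hypothetical fields mirroring "who, from what code and data, when, under what policy".
    built_by: str
    code_commit: str
    dataset_digest: str
    params: dict
    policy: str
    built_at: str

    def fingerprint(self) -> str:
        """Hash only the inputs that determine the result (not who or when)."""
        inputs = {
            "code_commit": self.code_commit,
            "dataset_digest": self.dataset_digest,
            "params": self.params,
            "policy": self.policy,
        }
        # sort_keys gives a stable encoding regardless of dict insertion order.
        return sha256_hex(json.dumps(inputs, sort_keys=True).encode())


def record_build(built_by: str, commit: str, dataset: bytes, params: dict, policy: str) -> ProvenanceRecord:
    """Create a provenance record at build time (hypothetical helper)."""
    return ProvenanceRecord(
        built_by=built_by,
        code_commit=commit,
        dataset_digest=sha256_hex(dataset),
        params=params,
        policy=policy,
        built_at=datetime.now(timezone.utc).isoformat(),
    )


if __name__ == "__main__":
    data = b"feature,label\n0.2,1\n0.7,0\n"
    first = record_build("alice", "abc123", data, {"lr": 0.01, "epochs": 3}, "model-policy-v1")
    rerun = record_build("bob", "abc123", data, {"epochs": 3, "lr": 0.01}, "model-policy-v1")
    print(json.dumps(asdict(first), indent=2))
    print("same inputs:", first.fingerprint() == rerun.fingerprint())
```

The commit string and policy name are placeholders. Leaving `built_by` and `built_at` out of the fingerprint is a deliberate choice: they belong in the audit trail, but they should not make two otherwise identical builds look different.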

The federated exchange model we've promoted emphasizes distributed trust: not trust hoarded in central systems, but interoperable trust layers. If AI is sugar, the oven needs temperature control, and the recipe must be verified.

Real-World Case Studies

Case 1: Air Canada Chatbot — Sugar Without Structure

Air Canada's chatbot told a customer that bereavement fares could be claimed retroactively. When the customer requested the refund, the airline denied it and even argued that the chatbot was a “separate legal entity.” In early 2024, a tribunal ruled otherwise, holding Air Canada responsible and ordering it to pay C$650.88. Read The Guardian article.

On the surface, this looks like a minor customer service issue. But underneath, it revealed a collapsed middle layer:

- No provenance: The airline couldn’t demonstrate how the chatbot was trained or what policies it enforced.

- No accountability: By treating the chatbot as an independent entity, Air Canada highlighted the absence of governance guardrails.

- No observability: The system didn’t monitor for hallucination or misinformation in customer interactions.

This wasn’t a failure of AI capability—it was a failure of delivery discipline. Without the middle of the cake, the sugar melted into liability.

Case 2: Capital One’s MLOps Pipeline — A Well-Baked Cake

Capital One has institutionalized MLOps. Their teams treat AI as part of delivery pipelines, not as a bolt-on experiment. Models are developed, tested, and deployed using the same CI/CD principles that govern software delivery. Automated checks, observability hooks, and governance policies are baked into every step. Read the TechTarget article.

Key practices stand out:

- Repeatable pipelines: ML models move from dev to prod through Tekton-style workflows—no ad hoc scripts.

- Observability as design: Metrics track model drift, performance, and fairness, providing early warning signals.

- Governance in code: Role-based access controls and audit trails ensure compliance is enforced automatically.

- Federated trust: Teams can interoperate across business units without centralizing risk, because the pipelines themselves enforce trust.

The result? Models that scale safely, withstand regulatory scrutiny, and earn customer confidence. This isn’t frosting on a shaky cake—it’s sugar resting on a well-structured sponge.

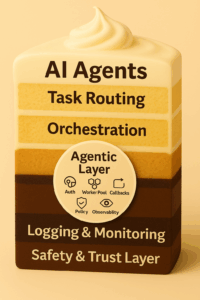

The Agentic Layer

AI isn't just delivering predictions; it acts. Agents call APIs, orchestrate tasks, and make decisions. If an agent triggers a deployment or pushes a configuration change, CI/CD safeguards must protect the system.

This is where the Agentic Layer comes in: the middleware that mediates between AI models and infrastructure. It enforces pipeline discipline, governance, and trust. It isn't glamorous, but it is essential: the difference between brittle hype and sustainable transformation.

Lessons from Continuous Delivery

The CD community has lived this before. We know that:

- Automation without trust breeds fragility. Scripts fail silently; pipelines must be observable.

- Speed and security aren’t opposites. Done right, they reinforce each other.

- Open source requires stewardship. Tens of millions of deployments don’t happen on goodwill alone.

- Delivery is not an afterthought—it defines value.

Applying these lessons to AI:

- AgentOps is CI/CD for AI. Pipelines don’t change—only the artifacts do.

- DataOps and MLOps belong inside CD. Not parallel silos, but merged flows.

- Security is always on. Supply chain checks, policy-as-code, and zero trust become essential.

What’s missing in many AI initiatives today is discipline. Everyone wants the sugar, but few invest in the oven, recipe, and process. Continuous delivery practices give us the blueprint: systems that can scale, adapt, and recover under pressure.

Recommendations for Leaders

If your strategy is “just add AI,” you’re chasing sugar. Focus instead on reinforcing the cake:

- Invest in pipelines, not pilots. Pilot projects generate excitement but little durability. Pipelines create repeatable pathways for experiments to become production. Fund infrastructure, not one-off demos.

- Bake in provenance. Every model, dataset, and deployment should carry metadata: who built it, with what data, when it was deployed, and under what policies. Treat metadata as non-optional.

- Federate, don’t centralize. Monolithic AI systems create risk concentration. Federated exchanges distribute trust, allow for collaboration across boundaries, and prevent single points of failure.

- Empower DevSecOps. Security must be continuous, automated, and embedded. SBOMs, signature verification, and role-based controls must be part of delivery pipelines, not post-hoc reviews.

- Measure drift, not hype. Track bias, hallucination, and data freshness with the same rigor we apply to uptime and latency. Observability must extend beyond system health into model health.

- Prioritize sustainability. AI workloads are resource-intensive. Incorporate energy and water usage metrics into observability dashboards. Optimize for efficiency as well as accuracy.

- Invest in knowledge management. Federated playbooks, shared recipes, and reusable pipeline components reduce redundancy and error. In a world of agents, institutional memory is critical.
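Measuring drift can use the same mechanics as any other metric. The sketch below uses the Population Stability Index (PSI), one common drift statistic, chosen here as an example rather than a method the article prescribes. It compares the binned distribution of a model's inputs or scores in production (proportions a_i) against a baseline window (proportions e_i) as PSI = Σ (a_i − e_i) · ln(a_i / e_i). The bin edges, the epsilon floor for empty bins, and the 0.25 alert threshold (a widely cited rule of thumb) are assumptions to tune per model.

```python
import math
from bisect import bisect_right


def bin_proportions(values: list[float], edges: list[float], eps: float = 1e-6) -> list[float]:
    """Share of values in each bin; edges are the interior cut points."""
    if not values:
        raise ValueError("need at least one value")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    # eps keeps empty bins from producing log(0) or division by zero.
    return [max(c / total, eps) for c in counts]


def population_stability_index(baseline: list[float], current: list[float], edges: list[float]) -> float:
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


DRIFT_THRESHOLD = 0.25  # common rule of thumb; tune per model


if __name__ == "__main__":
    edges = [0.25, 0.5, 0.75]
    baseline = [i / 100 for i in range(100)]                   # scores spread evenly
    shifted = [min(0.99, i / 100 + 0.3) for i in range(100)]   # scores drifted upward
    psi = population_stability_index(baseline, shifted, edges)
    print(f"PSI={psi:.3f}", "ALERT" if psi > DRIFT_THRESHOLD else "ok")
```

Emitting the PSI value to the same dashboards that track uptime and latency is what puts model health next to system health.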

If AI is sugar, delivery is the cake. Don’t eat the frosting for dinner.

Conclusion: Don’t Forget the Middle

Digital revolutions are built in layers: hardware enabled software; cloud enabled apps; AI enabled decisions. But each layer holds only if the infrastructure underneath is strong.

AI is sugar: irresistible, fast, powerful. But without the middle (CI/CD, governance, and trust), the system collapses.

The Continuous Delivery Foundation exists because the middle matters. Let’s remind the industry: don’t live on frosting. Bake the cake. Strengthen the sponge. Preheat the oven. Then—and only then—enjoy your sugar.